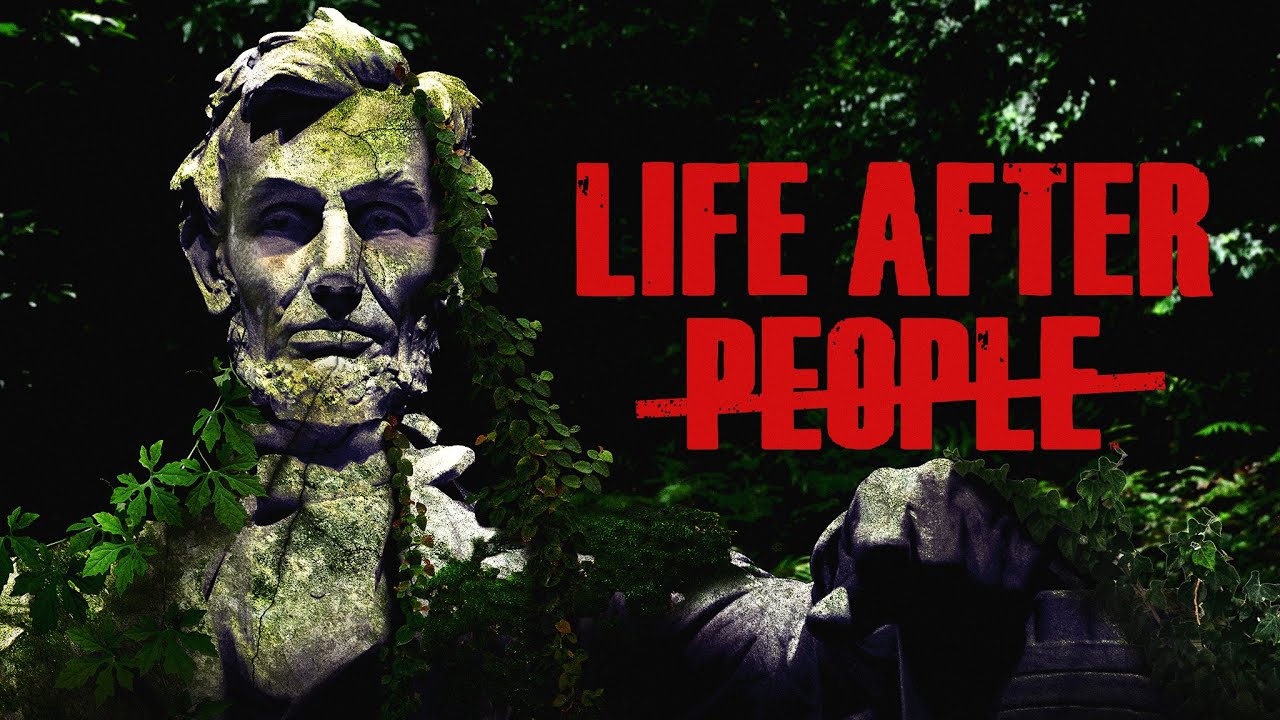

Tell us about the challenge you inherited on "Life After People."

The show explores what happens to the world when people disappear. Things fall apart, crash and get destroyed, and this happens all over the world. It would have been hugely expensive and time-consuming to shoot on location and use conventional VFX and editing methods. The original approach, with traditional tools, would have required a significant team of senior-level artists working for weeks just to get a sequence of flooding shots of New Orleans. Even then, the results weren’t meeting expectations, and production was paused.

That’s when the showrunners came to Eggplant and asked if we could use AI to finish the edits. I took a look at the script, did a proof of concept and we went from there. With Runway, we were able to do in a few hours what would previously have taken a month.

What drew you to Runway specifically when evaluating AI video tools?

We looked at a few options, but it came down to the right mix of quality and enterprise-grade solutions. Unlike some of the other tools we looked at, Runway could clearly deliver what we needed while fitting into our production pipelines. Other companies either couldn’t give us the quality we needed or couldn’t provide enterprise and legal support, or both.

How did you structure your workflow with Runway's tools?

Traditional tools are incredibly complex: there are hundreds of levers you can pull and buttons you can press. Because Runway’s interface is so simple, and because it was the first time many folks had worked with AI, we had to learn a completely new language as we iterated on our generations.

Especially early on, there was a lot of experimentation – for example, around verb tense. If you said someone "sparkles across the screen," you might get sparklers. But "this object is sparkling" would make it actually sparkle. We discovered that saying "the mall is closed" made all the people disappear because the AI understood conceptually that closed malls don't have people.

What specific Runway features proved most valuable?

The reference tools and Aleph were game-changers. Aleph was actually released during our final week of edits, and it was a life-saver. We had one last building in Mexico City that we needed to destroy, and the production leads wanted there to be more damage to the roof. Traditionally, we'd matte paint additional damage, track that new damage onto the Runway output in Nuke, then figure out how it fades into the dust storm that’s actually causing that damage – that’s at least four hours of comp work. Instead, I put it into Runway, said "more damage on the roof, please," and it was done. That made it into the show with just days left in production.

What were the biggest production challenges?

For what we needed, finding reference imagery was tough. We needed to destroy Central Park Tower, but there are no photos that look down at that building from above; at most, someone will take a photograph out of a top-story window. We could get a basic 3D model, but it didn't look realistic, so we had to turn it into photorealistic matte paintings before even getting it into Runway.

Getting secondary motion was also a challenge, though Aleph made that easy once it launched. We used a combination of traditional tools alongside Runway – compositing in actual trees in a scene showing a hurricane, for example.

How has working with Runway changed your approach to VFX production?

It's allowed small projects that could never afford those kinds of shots to include them. You’re still using traditional tools and workflows, but you can do far more shots and experiment until you get exactly what you want.

The worst thing that can happen is the viewer notices something that takes them out of the moment. AI, when not handled carefully, will instantly put someone in the uncanny valley. To get to that level of polish where you can suspend disbelief requires the same attention to detail as traditional VFX.

What's your advice for other production companies considering AI tools?

They should get a team together and put $10,000 into experimenting. If you don't do that, you're going to be left behind. The barriers are falling – this time next year it won't be a question of whether we can use AI, it'll be why wouldn't we.

What excites you most about the technology's future?

I'm working on a personal pilot project now – a 22-minute show that I'm producing essentially by myself using Act-Two and Aleph. Through Runway, I made myself into a cast of seven characters. It's very much like an animated series pipeline, a hybrid of animation and live production.

Every time I watch television now, I think "how could I have done that shot?" Some shots I could do today, others will take two more years of technology development. But for someone like me, it's allowing me to visualize films I had in my head that I never thought I'd get the budget to create.

Life After People was produced by Cream Productions, which collaborated with Eggplant Picture & Sound on the use of Runway.