Ezio Blasetti and Danielle Willems are pioneering the integration of AI video tools in architectural education and practice at the University of Pennsylvania. Blasetti brings expertise in computational design and digital fabrication, having taught algorithmic design since 2007. He recently joined NYIT as an Assistant Professor in the School of Architecture and Design. Willems specializes in design studio instruction and architectural visualization, focusing on how architects translate concepts into buildable forms. Together, they're developing groundbreaking workflows that use Runway's AI video tools to transform basic 3D models into complex, fabrication-ready designs, producing everything from academic prototypes to installations for the Venice Architecture Biennale.

Tell us about your approach to using AI in architectural education.

Working with AI for design and for architects is the new frontier for us. What's exciting is that there are no established techniques to teach yet; we're trying new tools and figuring them out together. Instead of specific techniques, we teach general methods and frameworks for exploring new tools, and we show how our work connects to the history of art, film, music and architecture. Our experiments began early: we started teaching coding for designers back in 2007-2008. That gradually migrated into parametric design and robotic fabrication, and more recently into AI tools.

How did you discover this unique workflow using Runway for 3D design?

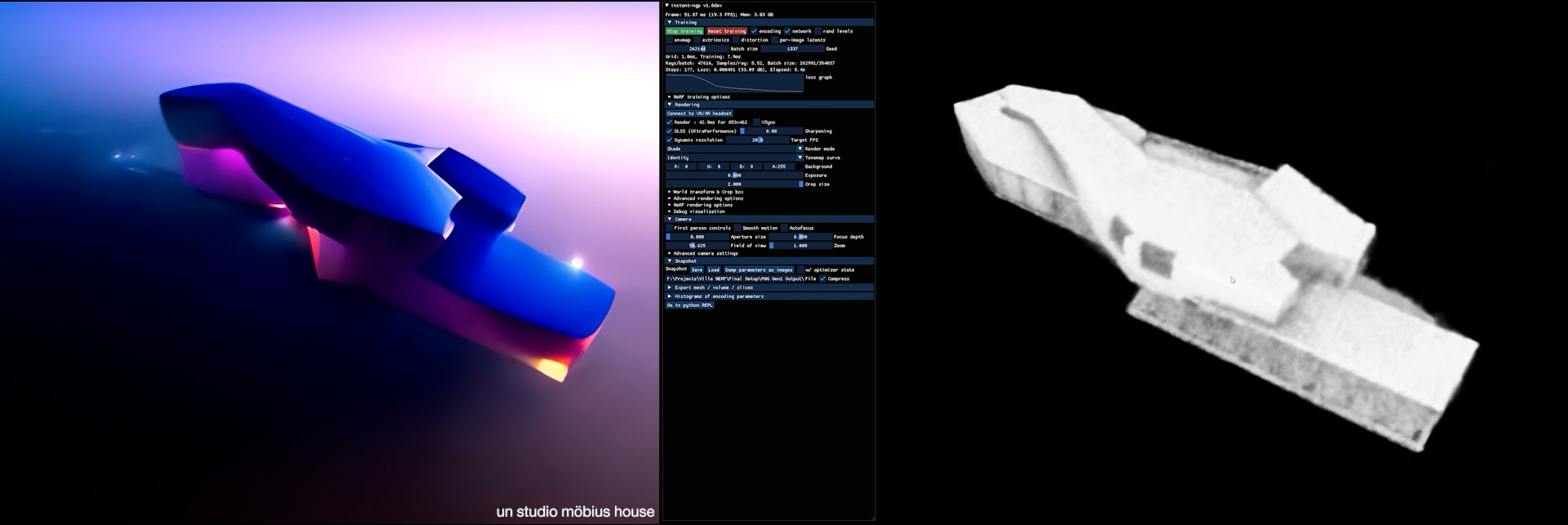

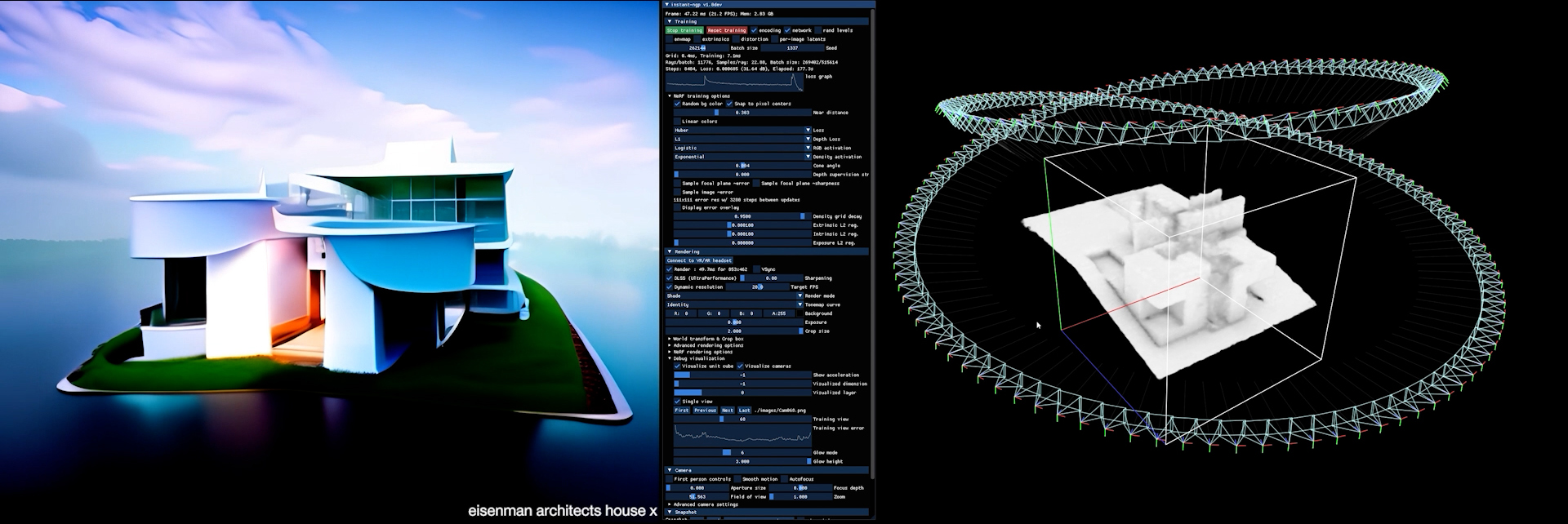

As architects, our interest in Runway comes from its ability to generate three-dimensional space, i.e. world building. Existing and legacy models built specifically for 3D object creation are heavily biased toward individual objects; it's really hard to get them to produce something more spatial. Those models typically give you the clean contours of an object rather than the broader, more spatial effects. That's the difference between cinematic space and avatar creation.

Walk us through your 3D workflow process.

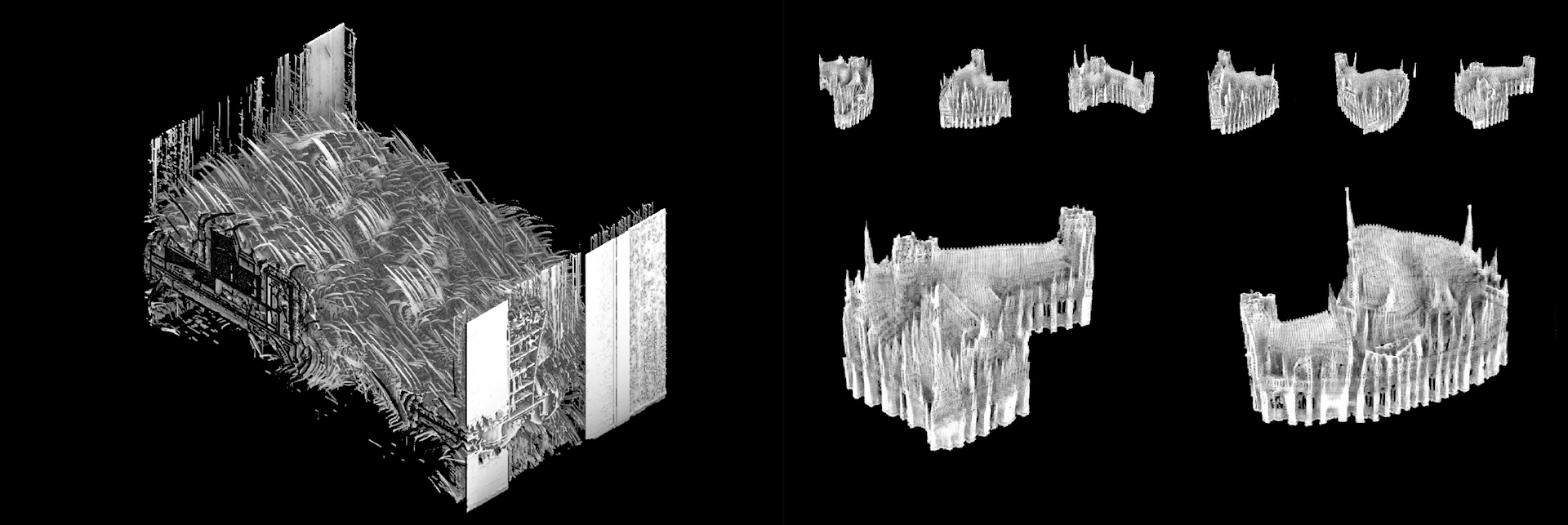

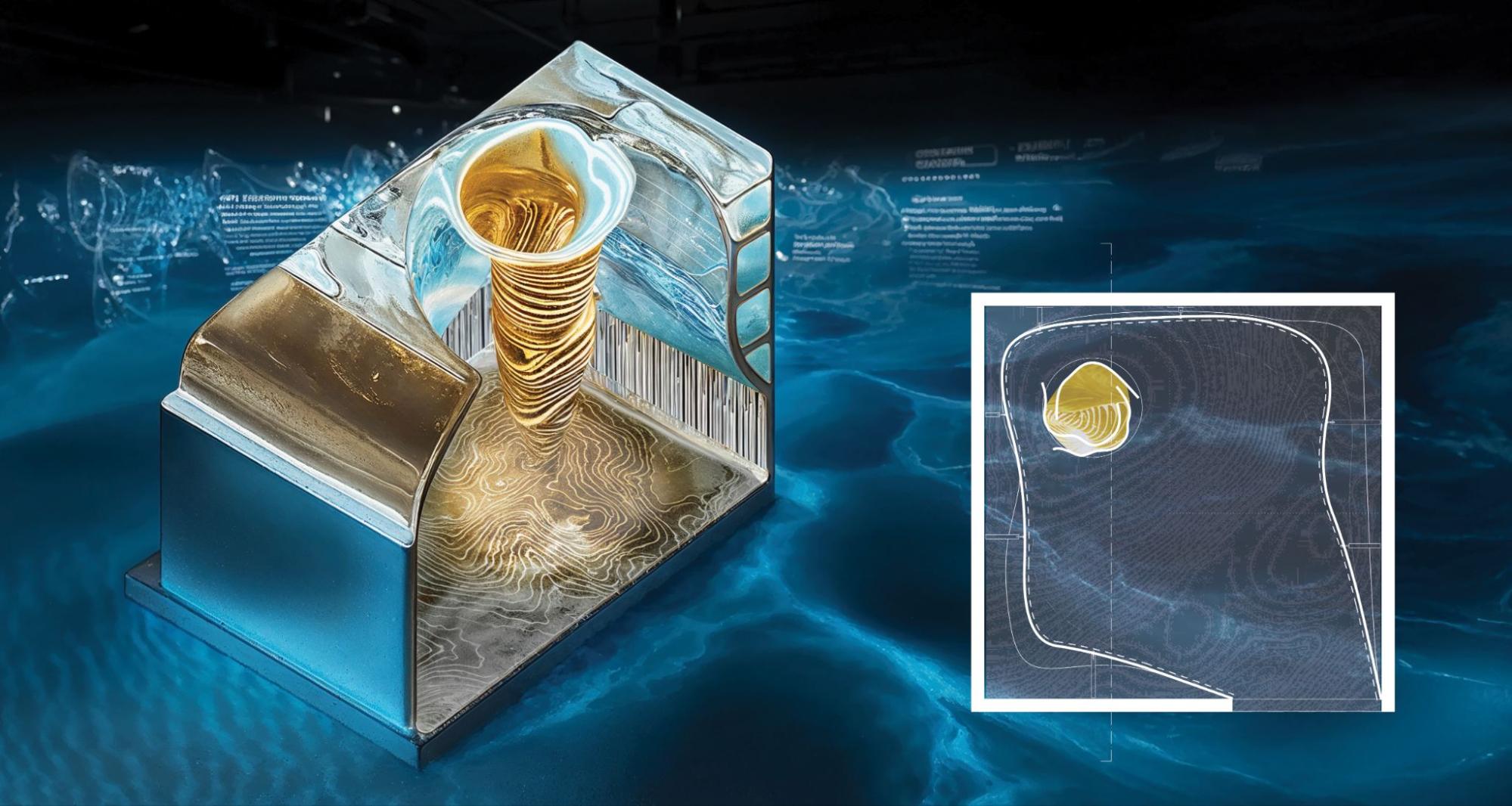

We start with a 3D model in which we have fine-tuned the exact positions of the cameras. We export a video and apply a new style with Runway's Video to Video. Then we reconstruct the 3D object from Runway's new video output. So the design goes from basic 3D software into Runway, which adds detail and aesthetic properties, and is then fed into a neural radiance field (NeRF), where we end up with a highly detailed 3D output. Architectural designers usually make very rough designs that lack detail, and it takes a lot of work to get from that rough design to something stylized and detailed. That's what Runway is really great at: adding style and detail in a way that can radically change and reimagine a design in seconds.

What improvements have you seen as Runway's tools have evolved?

Our earliest work was with Gen-1, in the first weeks after its release. We were working with maybe 10 sketches and a couple of Runway's tools, and the resolution was pretty low. Everything changed when Gen-3, and now Gen-4, came out: the consistency is much better.

How does this integrate with digital fabrication and real-world applications?

This is not just a conceptual model; it can actually be fabricated in real life and sent to a 3D printer. In our case, we used this workflow to create a pavilion for the Venice Architecture Biennale. We work in both robotics and architecture, and ultimately the pavilion was fabricated with a robot that weaves the patterns the design proposes. It's a traditional industrial robot, the kind that might be used to build cars in a factory, but fitted with a custom-designed end effector for this use case.

In parallel, Danielle's seminar “The Function of Fashion in Architecture” explored similar workflows at the scale of the body. Using Runway's Gen-3 and Gen-4 models, students generated AI-reimagined designs and brought them to life as full-scale dresses through advanced digital modeling and hybrid fabrication techniques. The garments were showcased in a live fashion show at the University of Pennsylvania, presented through a cross-disciplinary collaboration between the Weitzman School of Design and the Wharton School. The project demonstrates how generative AI can directly inform the design process, from initial concept to advanced fabrication.

What are you working on for the future?

One of the main reasons we connected with Runway, aside from the experiments and workflows we've been discussing, is that we're exploring a new, cross-disciplinary AI concentration at NYIT's School of Architecture. The concentration would span interior design, architecture and digital arts, including motion graphics, video and computer graphics. As a start, it might consist of three courses focused on computer graphics and visualization for architecture, interior design and digital arts. It will probably also include a couple of dedicated seminars that look solely at AI as a research tool.