Machine Learning En Plein Air: Building accessible tools for artists

As an artist and a newcomer to the field of machine learning, I am both fascinated by what the technology can achieve and somewhat overwhelmed by its complexity. Nevertheless, while exploring ML’s creative uses and considering its potential applications, I found a historical parallel that helped me make sense of what's to come: the explosion of creative innovation unleashed in the mid-1800s by the introduction of a new tool, the collapsible paint tube. Suddenly, artistic experimentation became accessible to creative individuals who had previously been shut out due to their lack of training and connection to master craftsmen, ushering in a major revolution in art and opening the doors for modern art. This time, it feels very similar. AI is a new paint tube that will unleash unprecedented creative potential. As a saying often attributed to Mark Twain goes: “History never repeats itself, but it does often rhyme.”

Part One: Painting outside

Until the mid-seventeenth century, at least in France, painting techniques and procedures were developed and disseminated in a somewhat secretive, cult-like manner, being passed down through generations of elite artists. Groups of master painters tightly controlled access to the craft, instructing their apprentices through esoteric writings, some even dubbed “Books of Secrets”¹, which were available exclusively to a select few. One such elusive text was “Les secrets de reverend Alexis Piemontois.” It offered guidance on various topics, including crucial instructions on how a painter should prepare their pigments.

Creating, mixing, and maintaining paint involved a complex procedure that included grinding, mixing, and drying pigment powders with linseed oil², followed by storing them in a pig’s bladder, which was sealed with a string³. Mastering this technique often demanded years of training. Studio painting became the standard practice because the notion of painting outdoors, without all necessary instruments and tools, was deemed excessively burdensome. The pigments, once produced, had to be transported in individual glass jars, with one jar for every required color³. The limited color palette and the tedious preparation process substantially restricted the artists' portrayals and curbed their experimental endeavors. While some artists managed to navigate the challenges of outdoor painting, on-site or en plein air painting was generally too impractical and complicated to gain widespread adoption.

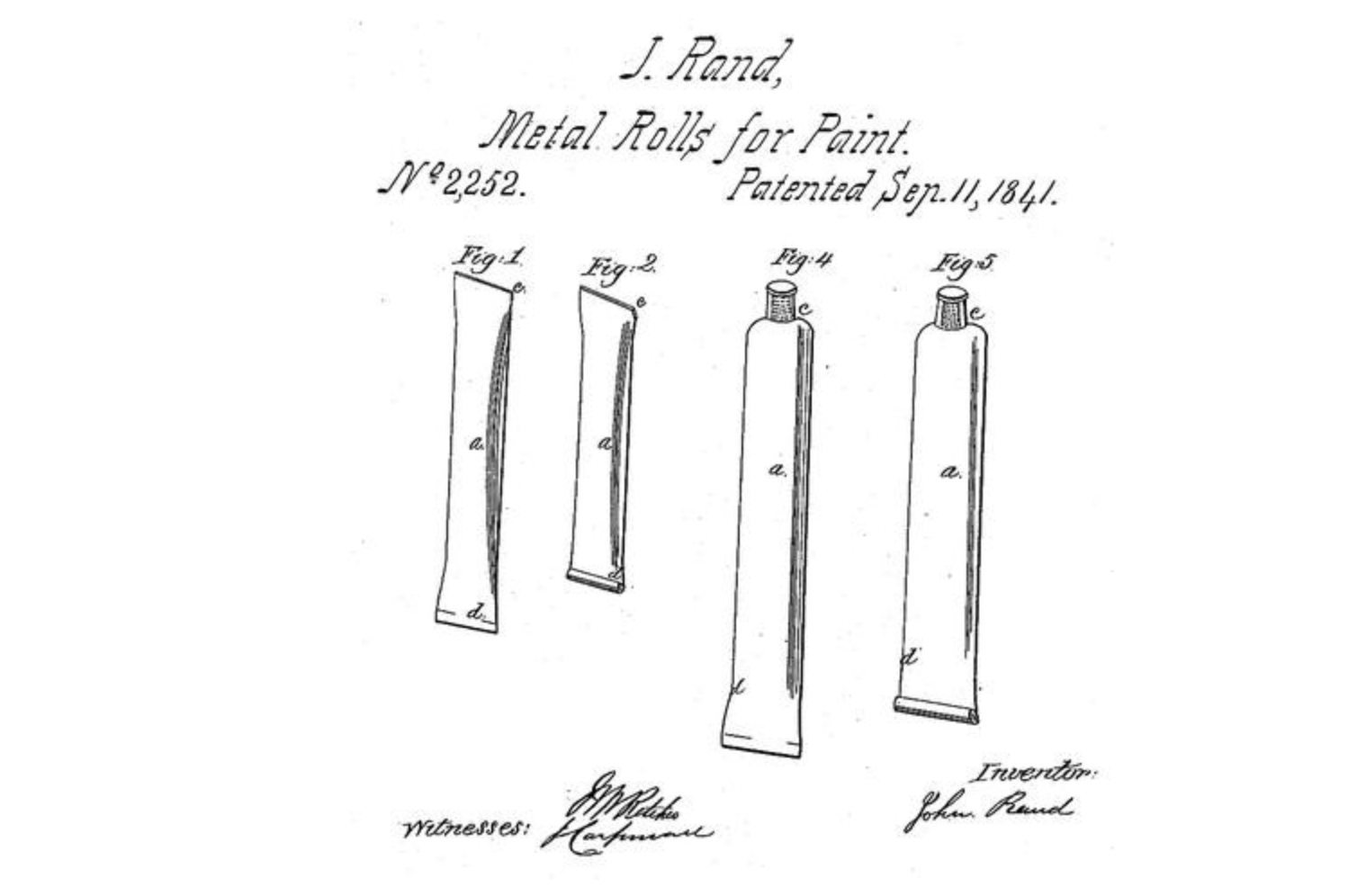

In 1841, an American portrait painter and inventor named John Goffe Rand introduced a simple invention that forever changed the way artists paint. Following a trip to Europe, he invented the collapsible paint tube. Made from tin and sealed with a screw cap, Rand's collapsible tube provided paint with a prolonged shelf life, prevented leaks, and could be repeatedly opened and closed.

This simple yet highly effective invention enabled paints and oil pigments to become readily accessible to people. The availability of colors expanded, as creating pigments no longer constituted a time-consuming task, and pigments did not dry out as rapidly. Suddenly, tools and techniques that had once been difficult to access became mainstream.

After Rand's invention, painting outdoors, or en plein air, became not only possible but also encouraged. Renoir once said, "Without colors in tubes, there would be no Cézanne, no Monet, no Pissarro, and no Impressionism."³ A new breed of artists was now able to experiment with new techniques, colors, themes, and depictions, particularly focusing on capturing the effect of natural light outdoors. Artists such as Monet, Pissarro, Renoir, Constable, and J.M.W. Turner advocated for en plein air painting and experimentation.

Having better tools for painting granted artists greater freedom to explore topics, escape studio constraints, and draw inspiration from the natural world around them.

Modern Pigment Powders

Fast-forwarding 150 years, new generations of artists are now experimenting with innovative applications of digital technology in their work. I like to conceptualize these current endeavors as the outdoor paintings of the 21st century. However, just as 19th-century painters without access to extensive and exclusive training struggled to make and use pigments before the advent of collapsible paint tubes, artists today struggle to engage fully with emerging technical fields and to integrate the latest tools and technologies into their work, because few tools are designed with their needs in mind.

Artificial intelligence techniques like machine learning and deep learning are evolving into a pivotal technology in our society. While commercial, social, and political applications, such as speech detection and image recognition, are omnipresent, experimentation with deep learning is, to a large extent, confined to computer scientists and engineers. For an outsider with no prior computer science experience, attempting to understand and utilize modern machine learning techniques to explore their creative potential can feel akin to trying to grind, mix, and dry secret pigment powders with linseed oil just to create a painting.

R. Luke DuBois has an excellent quote about the role of artists and how they should engage with technology.

“Every civilization will use the maximum level of technology available to make art. And it’s the responsibility of the artist to ask questions about what that technology means and how it reflects our culture.” (R. Luke DuBois⁵)

But to make art using a maximum level of technology requires, at the very minimum, access to said technology. Given that machine learning is poised to continue its evolution in complexity and segmentation, new strategies must be developed to unlock its potential for practitioners from various disciplines. Beyond artists, anyone with interest should be able to experiment with machine learning without the necessity to build and compile low-level, obscure C++ code. We require the machine learning equivalent of portable tin tubes to be accessible for everyone’s experimental use.

Imagine the array of creative projects that could emerge from algorithms trained to generate images and videos with text or detect human poses in videos and images. If the Kinect catalyzed a new wave of exploration for media artists, what possibilities will research like DensePose unfold?

Meet DensePose! We introduce a new computer vision task of dense human pose estimation in the wild, which aims on mapping a RGB image of a person to 3D surface of the human body.

— Natalia Neverova (@NataliaNeverova) February 2, 2018

First results are in https://t.co/UOrHfa6Tvy, data and code are coming soon. https://t.co/V7IJYHY6cn pic.twitter.com/WDPVNpHkJk

Part Two: Building the right tools

I first delved into machine learning because I wanted to build creative, bizarre, and unexpected projects with this technology. This ambition was one of the reasons I decided to enroll in NYU’s Interactive Telecommunications Program (ITP) nearly two years ago. My inaugural project utilizing machine learning was developed for a class I attended with Dan Shiffman. It involved an app that narrated stories derived from a combination of pictures, utilizing a pre-trained neural network capable of generating captions. Subsequently, utilizing that same model and as part of another class with Sam Lavigne, I created a tool to generate similar semantic scenes from a pair of videos. The user inputs a video and receives a scene with similar meaning from another video. I was captivated by what just one machine learning model could achieve.

I made a tool that uses machine learning to describe the content of videos and suggest similar scenes in other videos/films.

— Cristóbal Valenzuela (@c_valenzuelab) December 7, 2017

Try it here: https://t.co/Kd2x4UEauo pic.twitter.com/ujhJUofFSK

I also had the chance to collaborate with Anastasis Germanidis. Together we built a drawing tool that allows users to interactively synthesize street images with the help of Generative Adversarial Networks (GANs). The project uses two AI research papers published last year as a starting point (Image-to-Image Translation with Conditional Adversarial Networks by Isola et al. and High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs by Wang et al.) to explore the new kinds of human-machine collaboration that deep learning can enable.

👨🏻🔬 New experiment: Uncanny Road is a tool for collectively synthesizing a never-ending road with the help of Generative Adversarial Networks. Collaboration with @c_valenzuelab. https://t.co/aRShbYwfhV pic.twitter.com/ZxobdmPqqH

— Anastasis Germanidis (@agermanidis) December 27, 2017

One recurring challenge I continually face while working with machine learning echoes the pigment problem described in Part One above: The tools often seem too complex to even attempt to use and are subject to the “Just install something” assumption. The developers of the tools either presuppose that every user comes from a uniform background or they necessitate a wealth of knowledge about low-level internal functionality. Consequently, in my endeavors to utilize machine learning models and techniques, I found myself constructing tools in an effort to simplify the underlying systems while simultaneously enhancing my understanding of the topic. The impact has been twofold: I have been actively learning while concurrently building a tool to abstract its complexity.

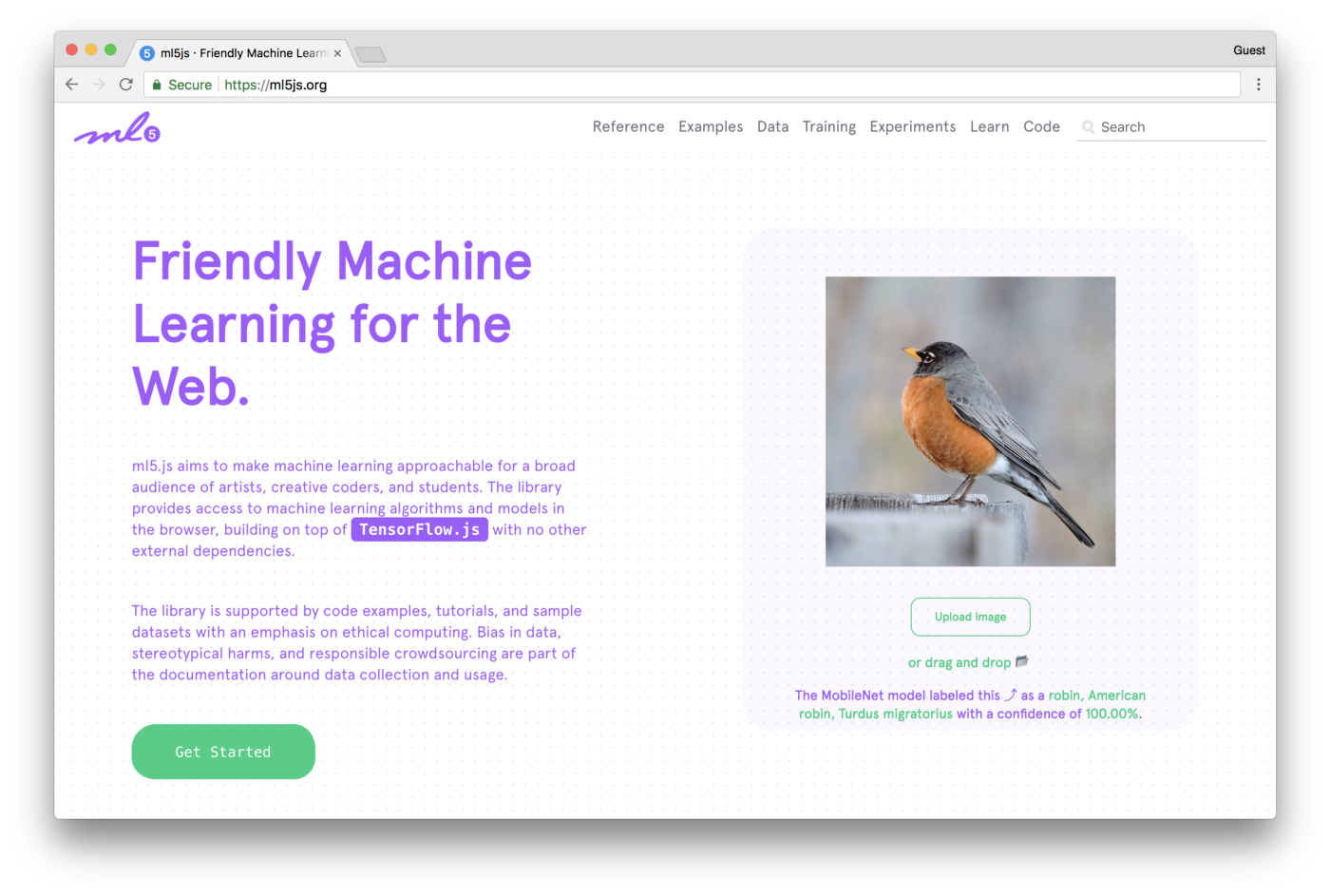

One of the tools I have helped to build aims to simplify machine learning for the web. ml5.js, a JavaScript library powered by TensorFlow.js, provides a more user-friendly interface for web-based machine learning. I have been assisting in developing this project under the guidance of Dan Shiffman, and in collaboration with an outstanding group. The primary goal of ml5.js is to further reduce barriers between lower-level machine learning and creative coding in JavaScript. As JavaScript is rapidly becoming the entry point for many new programmers, we hope it can also serve as their initial gateway to machine learning.

I have also explored the potential of applying principles from ml5.js to other creative frameworks and environments. A significant portion of this endeavor has been directed toward posing questions about utilizing machine learning in various creative workflows. For instance, can contemporary machine learning techniques be employed to support, generate, or comprehend a creative process? How might a graphic illustrator benefit from a deep neural network capable of describing image content? What could a 3D animator do with a neural network trained to generate speech? Can a musician craft sound using an algorithm that creates photorealistic portraits? How should or could individuals with limited experience engage with machine learning in an optimal manner? I have been exploring these types of questions through my ITP thesis project, Runway, and would like to succinctly discuss some of my discoveries and creations thus far.

But first: Models.

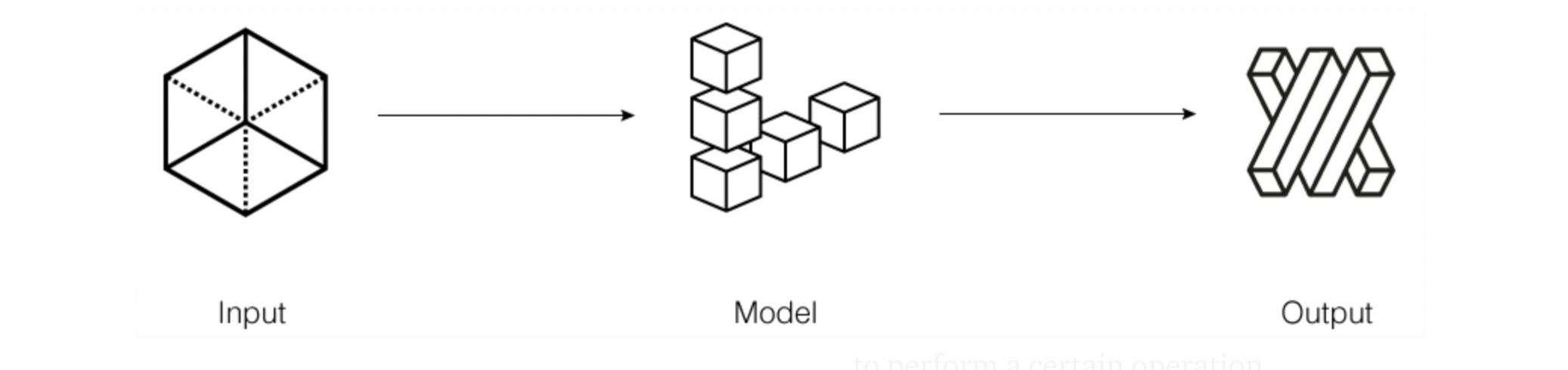

For the most part, machine learning involves a sequence of steps engineered to generate a model representation of a data distribution. A model represents a machine-learned abstraction of the input data. Consequently, a model designed to recognize faces must first be trained using datasets encompassing a wide variety of faces. Once trained, it should be able to recognize faces from unseen pictures. This model becomes a highly abstract representation of the input data, now proficient in recognizing faces. In reality, the process is somewhat more complex. It involves collecting a substantial number of data points, creating training, validation, and test datasets, selecting an appropriate architecture, choosing the right hyperparameters, and then training your model, preferably using a graphics processing unit (GPU) for faster results.
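To make those steps a little more concrete, here is a minimal Python sketch using only the standard library. Everything in it is an assumption made for illustration: the toy two-cluster data stands in for a real dataset, the nearest-centroid "model" stands in for a real architecture, and the function names are hypothetical. It skips hyperparameter selection and GPUs entirely, but it shows the basic shape: collect data, split it, train, then evaluate on data the model has not seen.

```python
import random


def make_toy_data(n_per_class=100, seed=0):
    """Generate labeled 2D points from two made-up clusters (a stand-in for real data)."""
    rng = random.Random(seed)
    data = []
    for label, (cx, cy) in enumerate([(0.0, 0.0), (4.0, 4.0)]):
        for _ in range(n_per_class):
            point = (rng.gauss(cx, 1.0), rng.gauss(cy, 1.0))
            data.append((point, label))
    rng.shuffle(data)
    return data


def split(data, train=0.7, val=0.15):
    """Split the data into training, validation, and test sets."""
    n_train = round(len(data) * train)
    n_val = round(len(data) * val)
    return data[:n_train], data[n_train:n_train + n_val], data[n_train + n_val:]


def train_centroids(train_set):
    """'Training' here just means computing the mean point of each class."""
    sums = {}
    for (x, y), label in train_set:
        sx, sy, n = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (sx + x, sy + y, n + 1)
    return {label: (sx / n, sy / n) for label, (sx, sy, n) in sums.items()}


def predict(model, point):
    """Return the label whose centroid is closest to the point."""
    x, y = point
    return min(model, key=lambda lb: (x - model[lb][0]) ** 2 + (y - model[lb][1]) ** 2)


def accuracy(model, dataset):
    """Fraction of examples in the dataset that the model labels correctly."""
    correct = sum(1 for point, label in dataset if predict(model, point) == label)
    return correct / len(dataset)


data = make_toy_data()
train_set, val_set, test_set = split(data)
model = train_centroids(train_set)
print("validation accuracy:", accuracy(model, val_set))
print("test accuracy:", accuracy(model, test_set))
```

In a real project, the validation set is where you would compare architectures and hyperparameters, keeping the test set aside for a final check.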

People have trained and built models for many different purposes, including models to recognize emotions, objects and people, body positions, hot-dogs, spam and sounds; models to generate photorealistic faces, captions, streets, facades, voices and videos of airplanes morphing into cats; models to detect cancer, malaria and HIV. Some trained models are ethically dubious and raise important questions about the social impact of algorithms and biases in our society such as models used to segregate neighborhoods or predict crime.

However, not all models are created equal. Developers construct models using various programming languages, different frameworks, and for diverse purposes. Regardless of their construction, these models constitute the most pragmatic aspect of machine learning and ultimately, the thing that matters the most. Models are what you can use to build a company around, create contemporary-looking art and power search engines. Essentially, models encapsulate the essence of machine learning. They are the painting pigments of the machine learning realm, serving as the tools employed to craft art and business. Nevertheless, while many models are open-source, they are often enclosed within a metaphorical pig’s bladder: challenging to use, expensive, and time-consuming to set up.

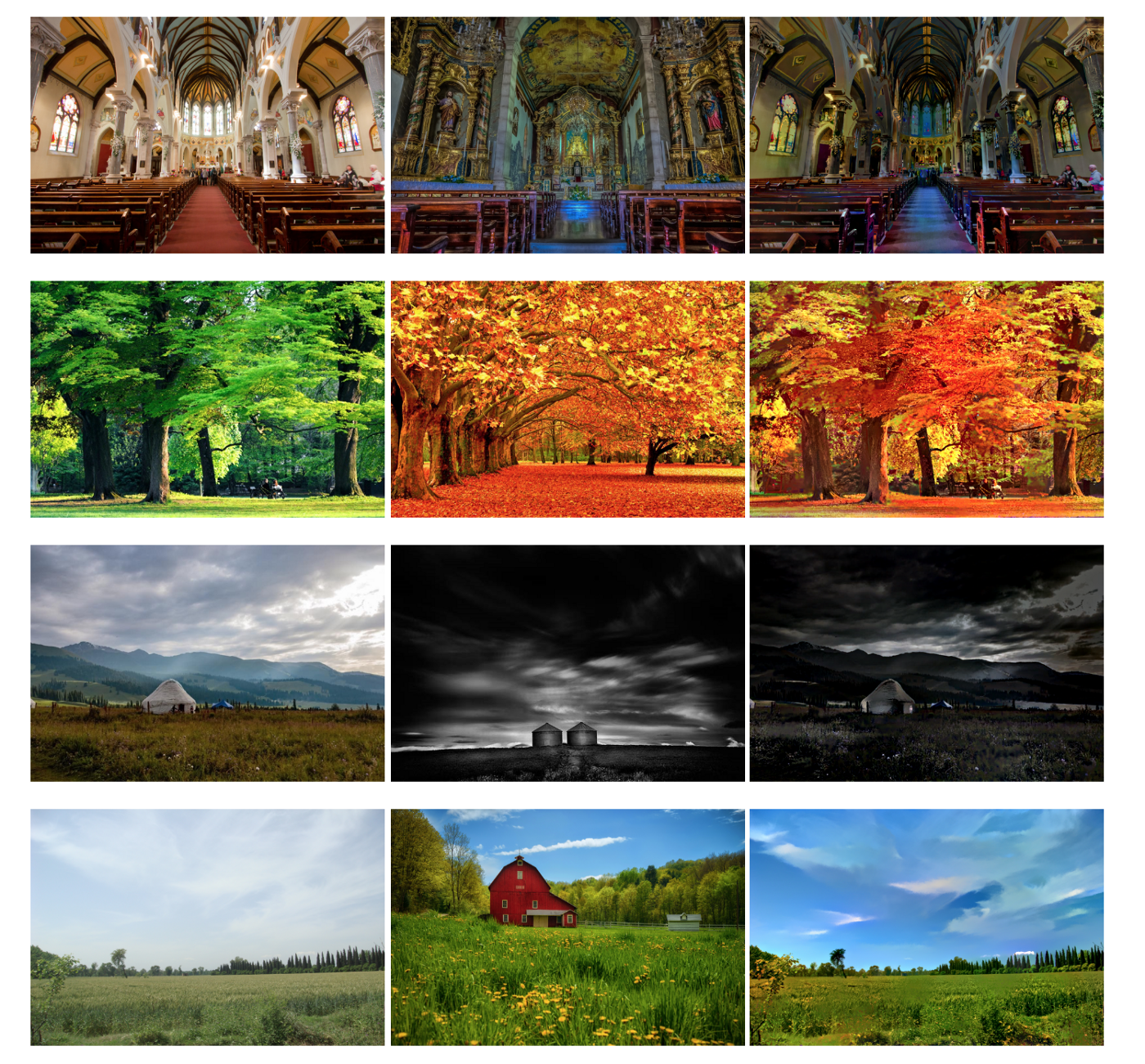

I’ve experienced the frustration of trying to learn about and use models ever since I first became interested in machine learning. To put things in perspective, a good example comes from academic research like Deep Photo Style Transfer, where the style of one image can be applied to another to generate realistic new images, which has a lot of creative potential. You can basically generate new images from a pair of distinctively styled images. Imagine a Kubrick scene remade in the style of Wes Anderson. Or the sketch of an artist being transformed through real-time image conversion into any artistic style or period she or he wants.

Runway

My ITP thesis project, Runway, serves as an invitation to explore these concepts. If models constitute the building blocks of machine learning, how might we devise simpler tools for accessing them? What prerequisites should be in place to operate and utilize models like Deep Photo Style Transfer? If we were to create contemporary tin paint tubes for digital artists, what form might they take?

🎉 Excited to share the project I've been working on in recent months @ITP_NYU https://t.co/FELiQjTVLb: easily run machine learning models and use them in creative and interactive ways.

— Cristóbal Valenzuela (@c_valenzuelab) April 9, 2018

Curious? Here’s a demo of OpenPose+@ProcessingOrg

Interested? Looking for beta testers, DM pic.twitter.com/hrp42oiD6g

Runway aims to simplify the process of utilizing state-of-the-art machine learning models as much as possible. While understanding the data behind these models and the training process is crucial, this project does not focus on establishing the optimal training environment to deploy models to production. It isn't about training an algorithm, nor is it about hyperparameters or in-depth data science. Instead, the project is founded upon the straightforward concept of making models accessible to people, enabling them to conceive new applications for those models. Consequently, they can gain a deeper understanding of how machine learning operates through a process of learning by doing.

In Runway, inputs prompt a pre-trained model to execute a specific operation. The model processes the inputs and produces outputs, which can be used in any desired manner. Runway provides a library of open-source, trained models to choose from, and it lets users link the inputs or outputs of those models to other software.

For example, you can operate a model trained to detect human body, hand, and facial key points and transmit the results of a live webcam stream to a 3D Unity Scene, a musical app running in Max/MSP, a sketch using Processing, or a website utilizing JavaScript. Abstracting the optimal and most efficient method of operating a machine learning model allows creators to focus on employing the technology rather than navigating the learning curve or setup of the technology, effectively redirecting their energy and time from tool setup to creation.
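To give a feel for what "transmitting the results" to another program can look like, here is a small Python sketch. It is not Runway's actual protocol or API. The JSON message layout, the use of plain UDP, the port number, and the function names are all assumptions chosen for illustration, and the keypoints are made-up values standing in for the output of a pose model on one video frame.

```python
import json
import socket


def encode_keypoints(keypoints):
    """Serialize (part, x, y, score) tuples into a JSON message (hypothetical layout)."""
    payload = {
        "keypoints": [
            {"part": part, "x": x, "y": y, "score": score}
            for part, x, y, score in keypoints
        ]
    }
    return json.dumps(payload).encode("utf-8")


def send_keypoints(keypoints, host="127.0.0.1", port=9000):
    """Send one frame of keypoints as a UDP datagram to a listening program."""
    message = encode_keypoints(keypoints)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))
    return message


# Made-up model output for a single frame: normalized coordinates plus a confidence score.
frame = [
    ("nose", 0.52, 0.31, 0.98),
    ("left_wrist", 0.40, 0.66, 0.87),
]
message = encode_keypoints(frame)
print(message.decode("utf-8"))
```

A sketch in Processing, a Unity scene, or a Max/MSP patch listening on the same port could parse each message and map the coordinates to visuals or sound, without knowing anything about how the model itself runs.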

Experimenting with real-time pose detection to sonify a live stream of a sidewalk. My final project for the awesome interactive music class with @yotammann @ITP_NYU pic.twitter.com/vhJ0cMikXf

— Cristóbal Valenzuela (@c_valenzuelab) March 28, 2018

A few weeks prior to my thesis defense and after developing a working beta version of Runway, I issued an open call, inviting anyone interested in trying the app. A primary concern of mine revolved around understanding how people would engage with a tool like this and whether they would find it useful at all. Fortunately, the response was overwhelmingly positive, not just from artists but also from designers, teachers, companies, and individuals curious about machine learning in general. To maintain manageability, I selected approximately 200 beta testers. Within just a couple of weeks, people began constructing interactive projects using state-of-the-art machine learning models, which may have seemed inaccessible before. These projects included building gaze and pose estimations in Unity and experimenting with object recognition in openFrameworks and JavaScript.

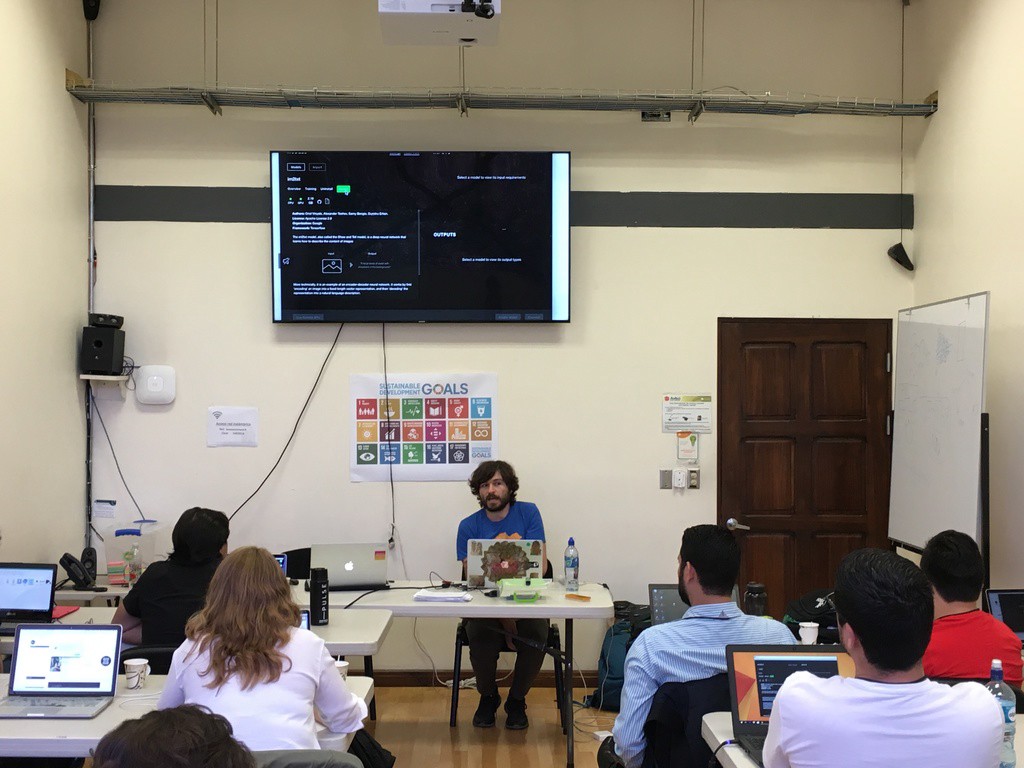

Unexpectedly, I discovered how beneficial the tool could be for machine learning educators. Since Runway manages the lower-level complexities of ML, educators can focus their time on explaining a model’s architecture, emphasizing the importance of collecting datasets, and discussing potential use cases. Most importantly, they can enable their students to build fully functional projects using machine learning.

Runway is an invitation to artists, and others, to learn about and explore machine learning through more accessible tools. Machine learning is a complex field that will likely continue to impact our society for years to come, and we need more ways to give more people access. Just as an innovative technical invention allowed the Impressionists to discover en plein air painting and begin to explore and understand new and uncharted territory, perhaps, with tools like Runway, we can usher in an era of en plein machine learning and, with it, unleash the creative potential of this technology for the benefit of the arts, as well as society as a whole.

Runway will be available for free, and it’s in beta now. You can learn more at runwayml.com

Many thanks to Dan Shiffman, Kathleen Wilson, Hannah Davis, Patrick Presto, and Scott Reitherman for their support in writing this post.

Notes

[1] From Books of Secrets to Encyclopedias: Painting Techniques in France between 1600 and 1800 — Historical Painting Techniques, Materials, and Studio Practice

[2] En plein Air — https://en.wikipedia.org/wiki/En_plein_air

[3] Never Underestimate the Power of a Paint Tube

https://www.smithsonianmag.com/arts-culture/never-underestimate-the-power-of-a-paint-tube-36637764/#lsrgxhpI13tUTkzg.99

[4] A Technique of the Modern Age: The History of Plein Air Painting — https://www.artelementsgallery.com/blogs/gallery-blog/104238278-a-technique-of-the-modern-age-the-history-of-plein-air-painting

[5] Insightful human portraits made from data — R. Luke DuBois — TED Talk: https://www.youtube.com/watch?v=9kBKQS7J7xI