December 11, 2025

by Runway

INTRODUCTION

GWM-1: our state-of-the-art General World Model, built to simulate reality in real time. Interactive, controllable and general-purpose.


Today, we're announcing GWM-1—our first general world model family. GWM-1 is an autoregressive model built on top of Gen-4.5. It generates frame by frame, runs in real time, and can be controlled interactively with actions—camera pose, robot commands, audio.
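To make "autoregressive, frame by frame, controlled with actions" concrete, here is a minimal toy sketch of an action-conditioned rollout loop. It is not Runway's model or API. `toy_next_frame`, `rollout`, the tuple-based `Frame`/`Action` types, and the `context_len` window are hypothetical stand-ins chosen for illustration. The only idea taken from the text is that each new frame is generated from earlier frames plus the current action.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical stand-ins: a real model would operate on images or latents,
# and actions could be camera poses, robot commands, or audio features.
Frame = Tuple[float, ...]
Action = Tuple[float, ...]
Model = Callable[[Sequence[Frame], Action], Frame]


def toy_next_frame(context: Sequence[Frame], action: Action) -> Frame:
    """Toy 'world model': shifts the most recent frame by the action vector."""
    last = context[-1]
    return tuple(f + a for f, a in zip(last, action))


def rollout(model: Model, first_frame: Frame, actions: Sequence[Action],
            context_len: int = 16) -> List[Frame]:
    """Generate one frame per action, each conditioned on recent frames."""
    frames: List[Frame] = [first_frame]
    for action in actions:
        # Assumption: the model sees a bounded window of past frames.
        context = frames[-context_len:]
        frames.append(model(context, action))
    return frames


print(rollout(toy_next_frame, (0.0, 0.0), [(1.0, 0.0), (0.0, 2.0)]))
# [(0.0, 0.0), (1.0, 0.0), (1.0, 2.0)]
```

The loop is what makes interactive control possible. Because each action is consumed as soon as it arrives, a user or agent can choose the next action after seeing the latest frame.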

GWM-1 comes in three variants: GWM Worlds for explorable environments, GWM Avatars for conversational characters, and GWM Robotics for robotic manipulation. Today, these are separate post-trained models. We're working toward unifying many different domains and action spaces under a single base world model.

We believe that world models are at the frontier of progress in artificial intelligence. Language models alone won't solve the world's hardest problems, such as robotics, disease, and scientific discovery. Real progress requires models that experience the world and learn from their mistakes, the same way humans do. This kind of trial and error can be massively accelerated when it happens in simulation rather than in the real world, and world models offer the clearest path to general-purpose simulation.

GWM Robotics is a learned simulator that generates synthetic data for scalable robot training and policy evaluation, removing the bottlenecks of physical hardware.

GWM Robotics is a world model trained on robotics data that predicts video rollouts conditioned on robot actions.

The model supports counterfactual generation, enabling exploration of alternative robot trajectories and outcomes.
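Counterfactual generation means rolling the simulator forward from the same starting point under different action sequences and comparing the outcomes. The sketch below illustrates this with a hand-written toy dynamics function in place of a learned model. `toy_step`, `counterfactual_rollouts`, and the low-dimensional `(gripper_x, object_x)` frame are hypothetical and are not part of any Runway SDK.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical low-dimensional "frame": (gripper_x, object_x) instead of pixels.
Frame = Tuple[float, float]
Action = float  # hypothetical 1-D gripper velocity command


def toy_step(frame: Frame, action: Action) -> Frame:
    """Toy dynamics: the gripper moves by `action` and pushes the object once it passes it."""
    gripper, obj = frame
    gripper += action
    if gripper > obj:
        obj = gripper
    return (gripper, obj)


def counterfactual_rollouts(step: Callable[[Frame, Action], Frame], start: Frame,
                            branches: Dict[str, Sequence[Action]]) -> Dict[str, List[Frame]]:
    """Roll out each alternative action sequence from the same starting frame."""
    results: Dict[str, List[Frame]] = {}
    for name, actions in branches.items():
        frames = [start]
        for action in actions:
            frames.append(step(frames[-1], action))
        results[name] = frames
    return results


outcomes = counterfactual_rollouts(toy_step, (0.0, 2.0),
                                   {"push": [1.0, 1.0, 1.0], "hold": [0.0, 0.0, 0.0]})
print({name: frames[-1] for name, frames in outcomes.items()})
# {'push': (3.0, 3.0), 'hold': (0.0, 2.0)}
```

Only the branch that moves the gripper far enough displaces the object. This "what if we had acted differently" comparison is expensive to reproduce on physical hardware, because the real scene would have to be reset exactly between trials.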

Synthetic data augmentation for policy training

Use the world model to generate synthetic training data that augments your existing robotics datasets across multiple dimensions, including novel objects, task instructions, and environmental variations. This synthetic data improves the generalization capabilities and robustness of your trained policies without requiring expensive real-world data collection.

Policy evaluation in simulation

Test the performance of your policy models (for example, vision-language-action (VLA) models such as OpenVLA or OpenPi) directly within Runway's world model instead of deploying them to physical robots. This approach is faster, more reproducible, and significantly safer than real-world testing, while still providing realistic behavioral assessments.
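Evaluating a policy inside a world model is a closed loop: the policy picks an action from the current frame, the simulator predicts the next frame, and a success criterion is checked at the end of each episode. The sketch below shows that loop with toy components. `toy_world_model`, `proportional_policy`, `evaluate_policy`, and the `horizon`/`tol` parameters are hypothetical. A real setup would plug in a VLA model and Runway's simulator in their places, and this sketch does not call OpenVLA, OpenPi, or any Runway API.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical low-dimensional "frame": (gripper_x, target_x).
Frame = Tuple[float, float]
Action = float
Policy = Callable[[Frame], Action]
WorldModel = Callable[[Frame, Action], Frame]


def toy_world_model(frame: Frame, action: Action) -> Frame:
    """Stand-in for the learned simulator: the gripper moves by `action`."""
    gripper, target = frame
    return (gripper + action, target)


def proportional_policy(frame: Frame) -> Action:
    """Stand-in for a policy such as a VLA model: steps halfway to the target."""
    gripper, target = frame
    return 0.5 * (target - gripper)


def evaluate_policy(policy: Policy, world_model: WorldModel, starts: Sequence[Frame],
                    horizon: int = 10, tol: float = 0.05) -> float:
    """Closed-loop evaluation: the policy acts, the world model predicts the next frame."""
    successes = 0
    for frame in starts:
        for _ in range(horizon):
            frame = world_model(frame, policy(frame))
        gripper, target = frame
        if abs(gripper - target) <= tol:
            successes += 1
    return successes / len(starts)


starts = [(0.0, 1.0), (0.0, 100.0)]
print(evaluate_policy(proportional_policy, toy_world_model, starts))  # 0.5
print(evaluate_policy(lambda frame: 0.0, toy_world_model, starts))    # 0.0
```

Because the simulator is just a function, the same set of start states can be replayed for every policy checkpoint. This is where the reproducibility advantage over physical testing comes from.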

Gen-4.5

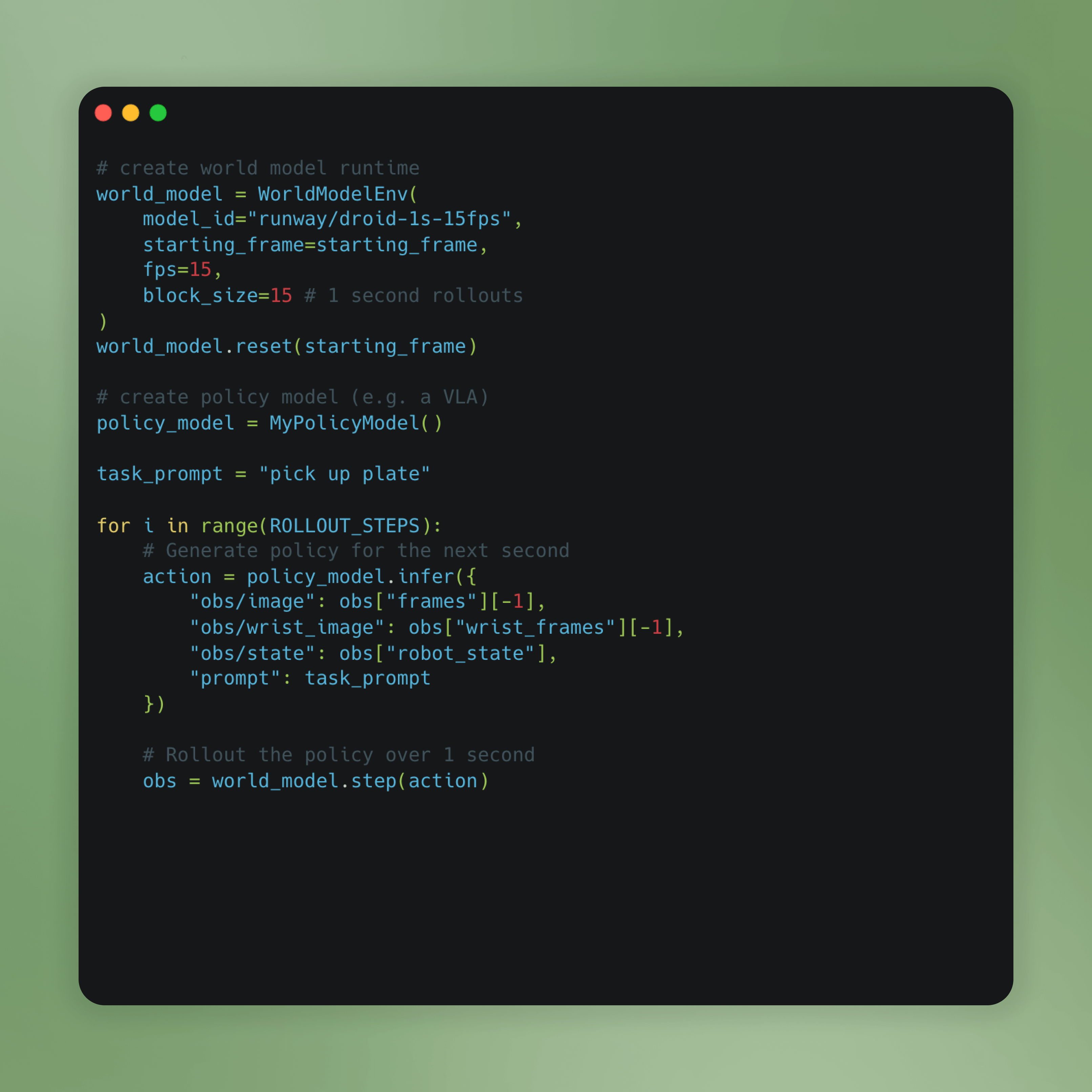

GWM-1 Robotics SDK

A Python SDK for Runway's robotics world model API that enables action-conditioned video generation using models trained on robotics data. The SDK supports multi-view video generation and long-context sequences, and its interface is designed for seamless integration with modern robot policy models.

GWM Worlds: a new frontier for open-ended, interactive world simulation, and a way to build infinite explorable realities in real time.

Use cases

Gaming

Education

Training Agents

VR and Immersive Experiences

GWM Worlds enables players to move freely through coherent, reactive worlds without the need to manually design every space.

GWM Avatars is an audio-driven interactive video generation model that simulates natural human motion and expression for arbitrary photorealistic or stylized characters. The model renders realistic facial expressions, eye movements, lip-syncing, and gestures while both speaking and listening, and it sustains extended conversations without quality degradation.

Use cases

Real-time tutoring and education

Customer support and service

Training simulations

Interactive entertainment and gaming

Bring personalized tutors to life. Responsive characters that explain concepts, react to questions, and hold extended conversations with the natural expressions and gestures that make learning feel like a real dialogue.

GWM Avatars is coming soon to the Runway web product and Runway API for integration into your own products and services.

Gen-4.5 now supports native audio generation and native audio editing. Not only will you be able to generate novel videos with audio, but you'll also be able to edit the audio of existing videos to suit your needs. Gen-4.5 also introduces multi-shot editing. With multi-shot editing, you can make a change in your initial scene and propagate that change throughout your entire video.

Native Audio

Gen-4.5 can generate realistic dialogue, compelling sound effects, and immersive background audio, transforming the kinds of stories you can create with the model.

Audio Editing

Gen-4.5 can now edit the audio of existing videos to suit your needs.

Multi-shot video editing

Gen-4.5 can edit videos of arbitrary length, applying consistent transformations across multiple shots of any duration.

Change the background of the video to a jungle.

Change the skunk to a baby elephant.

Change the color of his eyes to red.

New Gen-4.5 capabilities are coming soon to the Runway web product.

Fill out this form to request GWM-1 early access