Announcing local GPU support

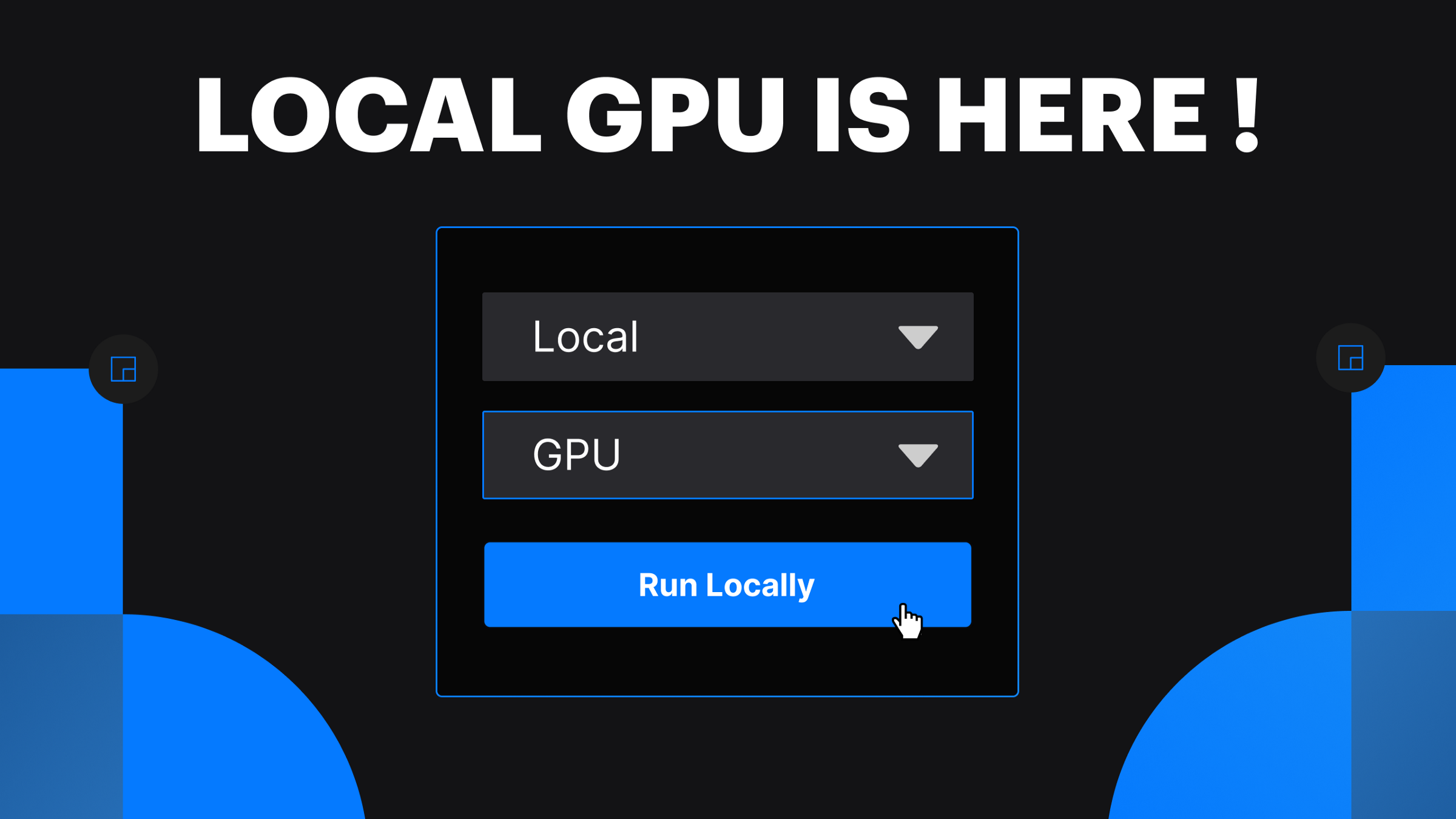

Most deep learning frameworks offer support for high-performance GPU accelerated training and inference, making neural networks run fast and smooth 🏄♀️. Today, we are super excited to announce that Runway supports GPU accelerated inference with your own GPU! This is still an experimental feature 🧪 (so expect things to change often). If you don’t have access to a Linux machine with a GPU, you can still run models with Runway’s built-in remote GPU mode. ✨

Read more to learn if your graphics card is supported in this release.

Supported Hardware

If you are running Linux and have an NVIDIA graphics card that supports CUDA, you can now run models using your own GPU hardware.

- You must be using Runway on Linux.

- You must have an NVIDIA GPU that supports CUDA.

- You must have `nvidia-docker` installed.
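As a rough self-check before trying local GPU mode, you can probe the three requirements above from a script. The following is a minimal sketch in Python. It assumes that `nvidia-smi --list-gpus` reporting at least one GPU is a reasonable proxy for "an NVIDIA GPU that supports CUDA", and that `nvidia-docker` is available as a command on your `PATH`. It is not a Runway tool, and the function name `check_local_gpu_prerequisites` is hypothetical.

```python
import platform
import shutil
import subprocess
from typing import Dict


def check_local_gpu_prerequisites() -> Dict[str, bool]:
    """Return a pass/fail map for the three requirements listed above.

    Hypothetical helper, not part of Runway. Assumption: a working
    `nvidia-smi` that lists at least one GPU stands in for
    "an NVIDIA GPU that supports CUDA".
    """
    # Requirement 1: Runway must be running on Linux.
    results = {"linux": platform.system() == "Linux"}

    # Requirement 2: an NVIDIA GPU, detected via the driver's nvidia-smi tool.
    has_gpu = False
    nvidia_smi = shutil.which("nvidia-smi")
    if nvidia_smi is not None:
        try:
            proc = subprocess.run(
                [nvidia_smi, "--list-gpus"],
                capture_output=True,
                text=True,
                timeout=10,
            )
            # Each detected card is printed as a line starting with "GPU <index>:".
            has_gpu = proc.returncode == 0 and "GPU" in proc.stdout
        except (OSError, subprocess.TimeoutExpired):
            has_gpu = False
    results["nvidia_gpu"] = has_gpu

    # Requirement 3: the nvidia-docker command is installed and on PATH.
    results["nvidia_docker"] = shutil.which("nvidia-docker") is not None
    return results


if __name__ == "__main__":
    for name, ok in check_local_gpu_prerequisites().items():
        print(f"{name}: {'OK' if ok else 'missing'}")
```

If any line prints `missing`, that requirement is the one to fix first. If all three pass, Runway's own checks still have the final say.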

Benefits: Fast and secure

Running machine learning models locally means that you don’t have to rely on remote network connections, which can introduce latency. It also means that your data never leaves your computer, adding an extra layer of security and privacy to your files.

If you are using Runway for interactive and real-time applications, now is the time to give a performance boost to your projects!

Get Started

Check out these resources to learn how to get started using your own local GPU:

More updates coming soon!

Try the new local GPU feature and let us know what you think! Download or update to the latest release: