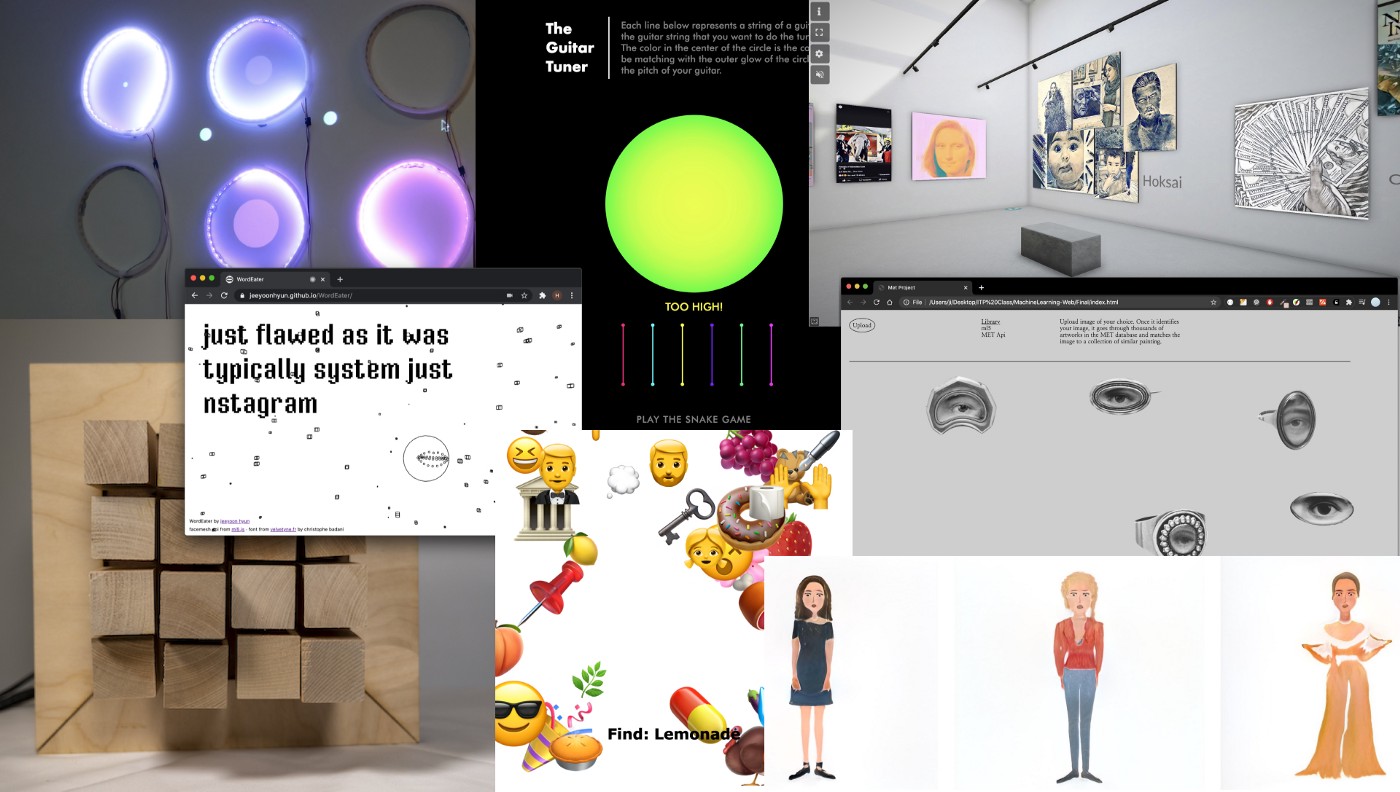

Creative Machine Learning Experiments Showcase

As the 2020 fall semester comes to an end, I’d like to share some projects that came out of the Machine Learning for the Web class at ITP/NYU. Machine Learning for the Web is a 14-week graduate course focused on building creative, interactive projects in the browser with machine learning techniques. Throughout the class, we use tools like Runway, ml5.js, Teachable Machine, TensorFlow.js, p5.js, and Arduino in the creative process.

Guess the Emoji by KJ Ha

Guess the Emoji is an interactive game designed to stimulate logical thinking and agility through an entertaining interface. Its rules are simple and straightforward. It reverses the conventional “Guess the Emoji” game, a fun guessing game in which the player guesses a word from a set of emojis. In this version, the player’s job is to use their nose to find the two emojis that make up the presented word.

Live Example | Built with ml5.js, PoseNet, p5.js.

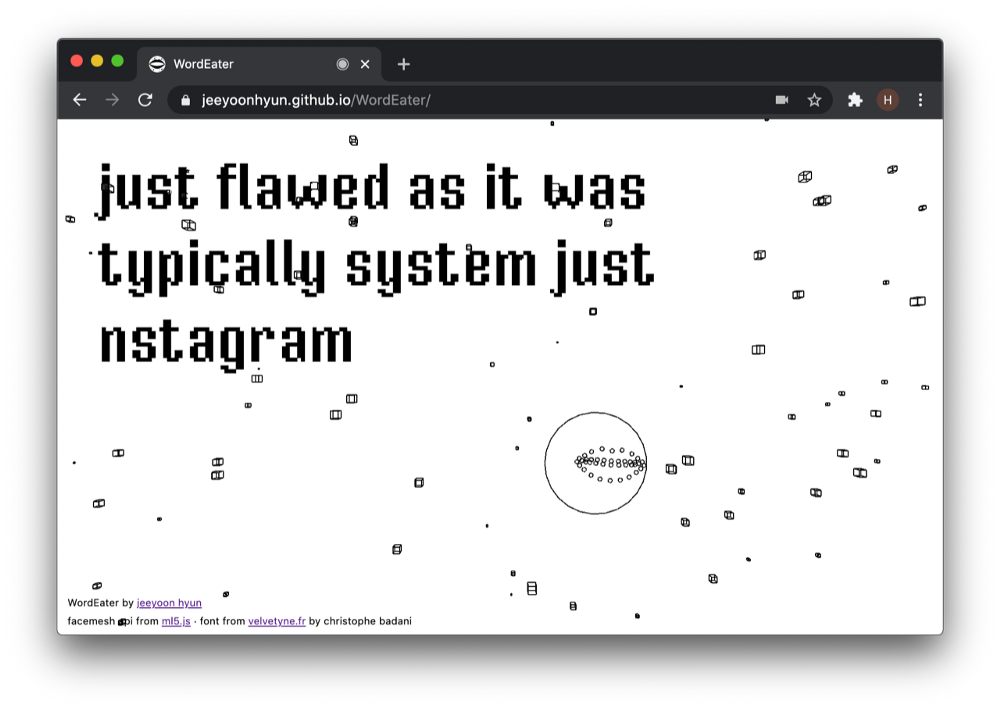

WordEater by Jeeyoon Hyun

WordEater is a mini-game where you can use your webcam to gobble up words in order to generate a sentence.

It is a browser-based game that lets you gobble up a bunch of meaningless words in order to make another meaningless sentence, eventually removing all words that you see on the screen.

It doesn’t matter if you don’t understand what the words or sentences are trying to say; they are going to be swallowed anyway. All you need to do is find some peace of mind by consuming all the disturbing, shattered pieces of information that make no sense at all. The goal of the game is to clean up your web browser by scavenging fragmented data with your mouth. Your web browser needs a break from the gibberish it encounters every day, too!

WordEater uses the Facemesh API in ml5.js to detect your mouth in your webcam. You can play the mouse version if you can’t use your webcam — for example, if you are wearing a mask.

Live Example | Built with ml5.js, Facemesh, p5.js
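WordEater’s source isn’t shown here, but the core “eat” check is easy to sketch. The TypeScript below is a minimal, hypothetical version: it assumes you already have upper-lip, lower-lip, and top/bottom-of-face landmarks as `[x, y, z]` points. Facemesh-style models report landmarks in this form, but which mesh indices correspond to the lips is an assumption to verify against the model’s landmark map. `mouthOpenRatio`, `eatWords`, the 0.08 threshold, and the 40-pixel bite radius are illustrative names and values, not part of ml5.js.

```typescript
// A landmark as [x, y, z], the shape Facemesh-style models use for mesh points.
type Point3 = [number, number, number];

// Hypothetical on-screen word the player can eat.
interface Word {
  text: string;
  x: number;
  y: number;
  eaten: boolean;
}

// Lip gap divided by face height, so the result does not depend on
// how close the player sits to the webcam.
function mouthOpenRatio(upperLip: Point3, lowerLip: Point3, faceTop: Point3, faceBottom: Point3): number {
  const gap = Math.hypot(lowerLip[0] - upperLip[0], lowerLip[1] - upperLip[1]);
  const faceHeight = Math.hypot(faceBottom[0] - faceTop[0], faceBottom[1] - faceTop[1]);
  return faceHeight > 0 ? gap / faceHeight : 0;
}

// While the mouth is open past the threshold, marks every uneaten word within
// biteRadius of the mouth center as eaten. Returns the words eaten this frame.
function eatWords(
  words: Word[],
  upperLip: Point3,
  lowerLip: Point3,
  faceTop: Point3,
  faceBottom: Point3,
  openThreshold = 0.08,
  biteRadius = 40,
): Word[] {
  if (mouthOpenRatio(upperLip, lowerLip, faceTop, faceBottom) < openThreshold) return [];
  const cx = (upperLip[0] + lowerLip[0]) / 2;
  const cy = (upperLip[1] + lowerLip[1]) / 2;
  const eatenNow: Word[] = [];
  for (const w of words) {
    if (!w.eaten && Math.hypot(w.x - cx, w.y - cy) <= biteRadius) {
      w.eaten = true;
      eatenNow.push(w);
    }
  }
  return eatenNow;
}
```

In a p5.js draw loop, these functions would be called once per frame with the latest prediction; the game is over when every word’s `eaten` flag is set.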

Pixel Topographies by Philip Abbott Cadoux

Pixel Topographies uses machine learning to generate elevation maps based on Connecticut topography, then creates a tangible 3D representation of that data.

My family sold the house I grew up in this year. It was very sudden, but for the best. I had never felt that connected to my home state, but when I realized I might never go back there, I saw how much I had come to appreciate it as a place to grow up. We ended up renting a house not far from where I grew up to get through the pandemic, and it made me think about what was making me so nostalgic. What kept popping into my head was that Connecticut is a beautiful place: it has lovely forests, beautiful colors, coastal towns, and even a few mountains. Then I stumbled across a site that shows elevation in Connecticut using color.

Inspired by it, I created an ElevationGAN and used Runway’s hosted model feature to grab the generated images. I then used p5.js to downsample the images into a pixel grid. From there, I used serial communication to send the elevation data to an Arduino, which moved the pixels in and out to reflect these values. This is a proof-of-concept piece that could be scaled up to create 1:1 representations of elevation maps, ultimately producing wooden topographies. I plan to develop this ML model further and try new things.

Built with Runway, JavaScript, p5.js, Arduino, a 3D printer, woodworking, and StyleGAN.
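The post describes the image-to-Arduino pipeline only at a high level. As a rough sketch of the downsampling step, the hypothetical TypeScript functions below average a grayscale elevation image into a coarse grid and encode it as one comma-separated line that could be written to a serial port. They assume one brightness value (0 to 255) per pixel in row-major order, with brighter meaning higher; p5.js `pixels` are RGBA, so one channel would need to be extracted first. The line-per-frame format and the `maxStep` actuator range are assumptions, not the project’s actual protocol.

```typescript
// Averages a row-major grayscale image (one 0-255 value per pixel)
// into a rows x cols grid of mean brightness values.
function toElevationGrid(pixels: number[], width: number, height: number, cols: number, rows: number): number[][] {
  const grid: number[][] = [];
  for (let r = 0; r < rows; r++) {
    const y0 = Math.floor((r * height) / rows);
    const y1 = Math.floor(((r + 1) * height) / rows);
    const row: number[] = [];
    for (let c = 0; c < cols; c++) {
      const x0 = Math.floor((c * width) / cols);
      const x1 = Math.floor(((c + 1) * width) / cols);
      let sum = 0;
      let count = 0;
      for (let y = y0; y < y1; y++) {
        for (let x = x0; x < x1; x++) {
          sum += pixels[y * width + x];
          count++;
        }
      }
      // A cell can be empty if the grid is finer than the image; treat it as lowest.
      row.push(count > 0 ? sum / count : 0);
    }
    grid.push(row);
  }
  return grid;
}

// Maps each cell's brightness to an integer actuator step in [0, maxStep]
// and joins all cells, row by row, into one newline-terminated line.
function encodeForSerial(grid: number[][], maxStep: number): string {
  const cells = grid.reduce<number[]>((acc, row) => acc.concat(row), []);
  return cells.map((v) => Math.round((v / 255) * maxStep)).join(",") + "\n";
}
```

On the Arduino side, a matching parser would split each line on commas and drive one actuator per value.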

Music Rings by Sihan Zhang

Music Rings is an interactive interface for musical performance. It allows users to control music and lights with their hand movements.

The installation consists of six rings, each made of a 70 cm LED strip and a piece of foam board. All the rings are mounted on a wall and controlled by an Arduino. A p5.js sketch is projected onto the wall and mapped to the rings. A webcam placed below the rings tracks the performer’s hand positions using PoseNet. When the performer’s hand moves inside a ring, the corresponding LED strip lights up and a music clip plays. When the hand leaves the ring and then enters it again, the LED strip turns off and the music stops.

Built with ml5.js, p5.js, PoseNet, Arduino.
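The on/off behavior of Music Rings amounts to edge detection: a ring changes state only on the frame where a hand first enters it, not on every frame the hand stays inside. Here is a minimal TypeScript sketch, assuming hand positions (for example, PoseNet wrist keypoints) have already been mapped into the same coordinate space as the projected rings. `Ring` and `updateRings` are hypothetical names, and sending the toggled indices to the Arduino or starting and stopping clips is left out.

```typescript
// Hypothetical per-ring state for one projected ring.
interface Ring {
  x: number;
  y: number;
  radius: number;
  wasInside: boolean; // whether any hand was inside on the previous frame
  active: boolean; // LED strip lit and clip playing
}

// Processes one frame of hand positions. A ring toggles only when a hand
// enters it (outside last frame, inside now). Returns the indices of rings
// that toggled this frame.
function updateRings(rings: Ring[], hands: Array<{ x: number; y: number }>): number[] {
  const toggled: number[] = [];
  rings.forEach((ring, i) => {
    const inside = hands.some((h) => Math.hypot(h.x - ring.x, h.y - ring.y) <= ring.radius);
    if (inside && !ring.wasInside) {
      ring.active = !ring.active;
      toggled.push(i);
    }
    ring.wasInside = inside;
  });
  return toggled;
}
```

Tracking `wasInside` is what keeps a hand resting inside a ring from flickering the LEDs on and off every frame.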

MET Painting Match Project by Ji Park

This web app identifies a user-uploaded image and matches it with a painting from the MET.

Using the ml5.js image classifier and the MET’s open API, the website identifies what is in a user-uploaded image and matches it to a collection of similar paintings from the MET database.

Live Example | Built with ml5.js, ObjectDetection (COCO-SSD), MET API
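The post doesn’t say which endpoints the app calls. As a hedged sketch, the TypeScript below assumes The Met Collection API’s public `search` endpoint (with its `hasImages` and `medium` filters) and its `objects/{objectID}` endpoint, and takes the most confident label from a result list shaped like `{ label, confidence }`, which both the ml5.js image classifier and the COCO-SSD object detector return. `bestLabel`, `metSearchUrl`, `metObjectUrl`, and the 0.5 threshold are illustrative, not the project’s code.

```typescript
// Minimal shape shared by ml5.js classifier and object detector results.
interface Detection {
  label: string;
  confidence: number;
}

const MET_BASE = "https://collectionapi.metmuseum.org/public/collection/v1";

// Returns the highest-confidence label at or above minConfidence, or null if none qualifies.
function bestLabel(detections: Detection[], minConfidence = 0.5): string | null {
  let best: Detection | null = null;
  for (const d of detections) {
    if (d.confidence >= minConfidence && (best === null || d.confidence > best.confidence)) {
      best = d;
    }
  }
  return best ? best.label : null;
}

// Search URL for paintings with images that match the label.
function metSearchUrl(label: string): string {
  return `${MET_BASE}/search?q=${encodeURIComponent(label)}&hasImages=true&medium=Paintings`;
}

// URL for a single object record (title, artist, image links, and so on).
function metObjectUrl(objectId: number): string {
  return `${MET_BASE}/objects/${objectId}`;
}
```

The search response lists matching `objectIDs`; fetching a few of those object records would supply the titles and image URLs to display next to the uploaded image.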

The Guitar Tuner + The Snake Game by Michelle Sun (Pei Yu)

An online tuning tool for guitar / a snake game that you play with your guitar.

The Guitar Tuner is an online tool that helps you tune your guitar. Tuning has always been a hassle for me because physical guitar tuners are small and easy to lose. Each line represents one of the guitar’s strings; choose the string you want to tune. The tool recognizes the pitch of each of the guitar’s six strings and suggests whether to tune it higher or lower. Your goal is to make the outer glow of the circle match the color at its center by adjusting the pitch of the string. The visual of this tuning tool was inspired by James Turrell’s art.
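The tuning advice boils down to comparing a detected frequency with the target for the chosen string. This TypeScript sketch assumes a pitch detector that reports a frequency in Hz (ml5.js pitch detection does this) and measures the difference in cents against standard tuning (A4 = 440 Hz). The 5-cent tolerance and the function names are illustrative assumptions, not taken from the project.

```typescript
// Standard-tuning targets in Hz, lowest string to highest.
const STANDARD_TUNING: Record<string, number> = {
  E2: 82.41,
  A2: 110.0,
  D3: 146.83,
  G3: 196.0,
  B3: 246.94,
  E4: 329.63,
};

type Advice = "tune higher" | "tune lower" | "in tune";

// Signed distance from the target in cents; 100 cents is one semitone.
function centsOff(frequency: number, target: number): number {
  return 1200 * Math.log2(frequency / target);
}

// Flat (negative cents) means the string should be tightened, sharp means loosened.
function tuningAdvice(frequency: number, stringName: string, toleranceCents = 5): Advice {
  const target = STANDARD_TUNING[stringName];
  if (target === undefined) throw new Error(`Unknown string: ${stringName}`);
  const cents = centsOff(frequency, target);
  if (Math.abs(cents) <= toleranceCents) return "in tune";
  return cents < 0 ? "tune higher" : "tune lower";
}
```

Because the cents value is signed and continuous, it could also drive the visual: the closer it gets to zero, the closer the outer glow’s color could be brought to the center color.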

After tuning the guitar, press “play the snake game” to play the game with your tuned guitar! This is a snake game that uses the first four strings of a guitar to control the direction of the snake. I’ve also made a ukulele version of both the Tuner and the Snake Game.

Live Example | Built with ml5.js, p5.js, PitchDetection
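A sketch of the snake controls, under stated assumptions: the post doesn’t specify which four strings steer the snake or which direction each one maps to, so this TypeScript example hypothetically uses the four lowest strings in standard tuning (E2, A2, D3, G3) with an arbitrary direction mapping. Each detected frequency snaps to the nearest string within 50 cents, and, as in a classic snake game, a command that would reverse the snake onto itself is ignored. All names and thresholds here are illustrative.

```typescript
type Direction = "up" | "down" | "left" | "right";

// Hypothetical mapping; the actual game's strings and directions may differ.
const STRING_DIRECTIONS: Array<{ freq: number; dir: Direction }> = [
  { freq: 82.41, dir: "left" }, // E2
  { freq: 110.0, dir: "up" }, // A2
  { freq: 146.83, dir: "down" }, // D3
  { freq: 196.0, dir: "right" }, // G3
];

const OPPOSITE: Record<Direction, Direction> = { up: "down", down: "up", left: "right", right: "left" };

// Direction of the nearest mapped string, or null if no string is within maxCents.
function directionForPitch(frequency: number, maxCents = 50): Direction | null {
  let best: Direction | null = null;
  let bestCents = Infinity;
  for (const s of STRING_DIRECTIONS) {
    const cents = Math.abs(1200 * Math.log2(frequency / s.freq));
    if (cents < bestCents) {
      bestCents = cents;
      best = s.dir;
    }
  }
  return bestCents <= maxCents ? best : null;
}

// Keeps the current heading unless the pitch maps to a new, non-reversing direction.
function nextDirection(current: Direction, frequency: number): Direction {
  const requested = directionForPitch(frequency);
  if (requested === null || requested === OPPOSITE[current]) return current;
  return requested;
}
```

Ignoring pitches that are far from every string also filters out stray noise between notes.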

The case for augmented virtual interfaces in the postcode by Bilal Sehgol

As a technology entrepreneur in New York City, I find market experiences very boring! Today, however, we can use technology to augment experiences and make them exciting. Augmented marketspaces in the postcode are possible, including a virtual exhibition of GAN art I made for my Machine Learning and Neural Aesthetics class at NYU.

More than a Peapod-style “virtual store,” it is an entire market space for AdRoll to place ads and collect metadata from a pigeon easy experience assisted by interactive chatbots! For instance, here is the sales exhibition of GAN art I made this semester: Experience an Augmented Art Marketplace (click the art pieces to interact or to find more information on the models used).

Live Example | Built with ml5.js, ml4a, Runway

Runway Education Program 👩‍🏫

Are you interested in using Runway in your classroom? Runway has dedicated plans for educators, including discounted educational licenses, resources, and teaching materials. Please let us know which Runway features you are most interested in using here. You can also check out some of our learning resources here: Learning Guides and Video Tutorials.

Share your experiments made with Runway 🌈

Have you created any experiments with Runway? If so, we’d very much love to hear from you and feature your experiments on our website! You can share your projects by filling out this form.

Thank you for reading! Questions? Comments? Send us a note here.