GAN Mixing Console

My name is Roy Macdonald. I am a Chilean artist/researcher/coder. Here is my portfolio.

From October 2019 to February 2020, I lived in New York City as a Something-In-Residence at Runway.

Context

Generative Adversarial Networks (GANs), and StyleGAN in particular, have recently become very popular as a method for creating photorealistic synthetic images, and they are especially good at generating human faces. Latent space interpolation has become quite a common way to visualize some of the range of images a GAN has learned to produce.
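One common form of latent space interpolation picks two latent vectors and blends between them in small steps, rendering one image per step. Below is a minimal sketch, assuming a 512-value latent vector drawn from a standard normal distribution (as in StyleGAN) and plain linear interpolation. Some tools use spherical interpolation instead. The function names are hypothetical and do not come from the original experiment.

```javascript
// Hypothetical sketch: linear interpolation between two latent vectors.
const LATENT_SIZE = 512; // assumed latent length (StyleGAN uses 512)

// Sample a latent vector from a standard normal distribution (Box-Muller).
function randomLatent(size = LATENT_SIZE) {
  const z = new Array(size);
  for (let i = 0; i < size; i++) {
    const u = 1 - Math.random(); // in (0, 1], so Math.log(u) is finite
    const v = Math.random();
    z[i] = Math.sqrt(-2 * Math.log(u)) * Math.cos(2 * Math.PI * v);
  }
  return z;
}

// Blend every component of a toward b by t (t = 0 gives a, t = 1 gives b).
function lerpLatent(a, b, t) {
  return a.map((ai, i) => ai + (b[i] - ai) * t);
}

// Evenly spaced frames from a to b, endpoints included.
function interpolationFrames(a, b, steps) {
  const frames = [];
  for (let s = 0; s < steps; s++) {
    const t = steps === 1 ? 0 : s / (steps - 1);
    frames.push(lerpLatent(a, b, t));
  }
  return frames;
}
```

Each frame would then be sent to the generator to produce one image of the animation.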

GAN Latent Space Interpolation

This video shows Latent Space Interpolation using StyleGAN.

Understanding

When I looked at how this technique works, I realized that it simply uses Perlin noise to smoothly change each of the 512 parameters (the values of the latent vector) that these GANs take as input. Perlin noise is a very common computer-graphics algorithm for generating pseudo-random numbers that change smoothly from one value to the next.
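A minimal sketch of that idea, assuming a 1D Perlin-style gradient noise written from scratch here (not any particular library's noise function) and a 512-value latent vector. The names `makeNoise1D` and `latentAtFrame`, the seed, and the `speed`, `scale`, and per-dimension offset values are hypothetical choices, not taken from the original experiment.

```javascript
// Small seeded PRNG (mulberry32) so the noise is reproducible.
function mulberry32(seed) {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// 1D Perlin-style gradient noise with period 256.
function makeNoise1D(seed = 1) {
  const rand = mulberry32(seed);
  // One random gradient (slope) in [-1, 1) per integer lattice point.
  const grad = new Float64Array(256);
  for (let i = 0; i < 256; i++) grad[i] = rand() * 2 - 1;
  // Perlin's quintic fade curve: 6t^5 - 15t^4 + 10t^3.
  const fade = (t) => t * t * t * (t * (t * 6 - 15) + 10);
  return (x) => {
    const x0 = Math.floor(x);
    const t = x - x0;
    const n0 = grad[x0 & 255] * t; // contribution of the left lattice point
    const n1 = grad[(x0 + 1) & 255] * (t - 1); // contribution of the right one
    return n0 + (n1 - n0) * fade(t); // smooth blend; exactly 0 at integer x
  };
}

// Latent vector for a given frame: every dimension samples the same noise
// at its own non-integer offset, so the 512 values drift independently.
function latentAtFrame(noise, frame, { size = 512, speed = 0.01, scale = 2 } = {}) {
  const z = new Array(size);
  for (let i = 0; i < size; i++) {
    z[i] = scale * noise(frame * speed + i * 17.3);
  }
  return z;
}
```

Calling `latentAtFrame(noise, frame)` once per animation frame yields latent vectors that change only slightly between consecutive frames, which is what makes the generated images morph smoothly.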

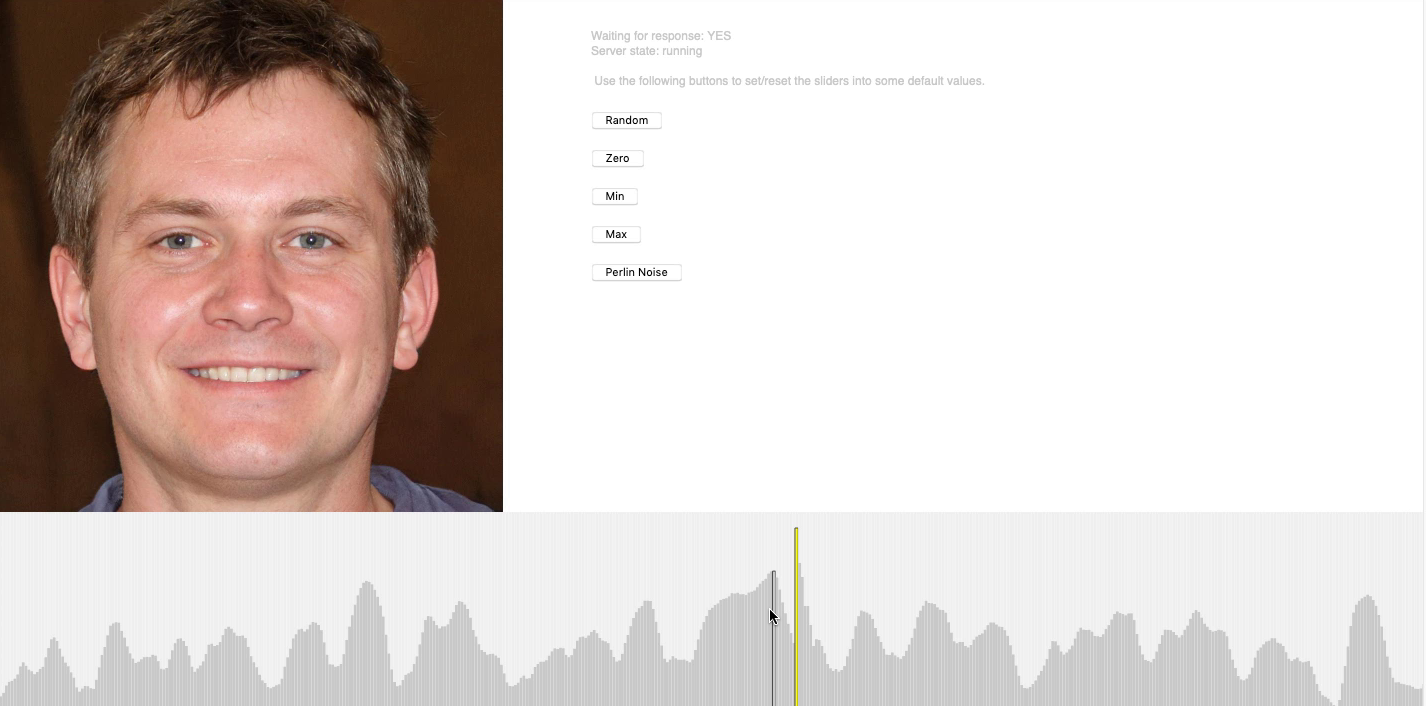

Because I wanted to play with these parameters, I thought of controlling them with something like a sound mixing console: 512 vertical faders, each controlling a single parameter of the GAN.
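A minimal sketch of how fader positions could map to latent values, assuming each fader reports a position in [0, 1] and that latent values are limited to the range [-3, 3]. Both the range and the function names are hypothetical, not taken from the original code.

```javascript
const NUM_FADERS = 512; // one fader per latent parameter
const Z_RANGE = 3; // assumed: latent values limited to [-Z_RANGE, Z_RANGE]

// Fader position in [0, 1] -> latent value in [-range, range]; 0.5 maps to 0.
function faderToLatent(position, range = Z_RANGE) {
  const p = Math.min(1, Math.max(0, position)); // clamp out-of-range input
  return (p * 2 - 1) * range;
}

// Inverse mapping, e.g. to place the faders from an existing latent vector.
function latentToFader(value, range = Z_RANGE) {
  return Math.min(1, Math.max(0, (value / range + 1) / 2));
}

// Read the whole console into a latent vector.
function consoleToLatent(faders) {
  if (faders.length !== NUM_FADERS) {
    throw new RangeError(`expected ${NUM_FADERS} faders, got ${faders.length}`);
  }
  return faders.map((p) => faderToLatent(p));
}
```

Centering the faders at 0.5 puts every parameter at 0, the mean of a standard normal latent distribution.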

Experiment

I initially built this experiment using Runway and p5.js. Then I ported it to Runway’s Hosted Models feature, which lets you use your Runway models inside websites. It is really nice, and it is what allows me to embed this experiment in a web page. :D

Conclusion

When I built this and showed it to the people at Runway, most of them said they had not seen an interface like this for navigating the latent space, and they liked it very much. As far as I know, it had not been done before.

This interface lets you see in much greater detail how a model works. Each parameter does not control one specific feature but rather something less well defined. Yet after fiddling with it for a bit, you will find patterns of change and certain areas of the faders that control a specific feature (I remember finding one that added or removed glasses from the resulting image). Usually you need to move a few adjacent sliders together to have a larger effect on the generated image, yet sometimes you can get quite dramatic changes by slightly moving a single one. Go on and explore it!
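Moving a group of adjacent sliders together amounts to adding the same offset to a contiguous run of parameters. A minimal sketch, assuming fader positions stored as numbers in [0, 1]; the function name and the clamping behavior are hypothetical choices.

```javascript
// Shift `count` adjacent faders, starting at index `start`, by `delta`.
// Returns a new array and leaves the original positions untouched.
function nudgeAdjacent(faders, start, count, delta) {
  const out = faders.slice();
  const end = Math.min(out.length, start + count); // stop at the last fader
  for (let i = Math.max(0, start); i < end; i++) {
    out[i] = Math.min(1, Math.max(0, out[i] + delta)); // keep within [0, 1]
  }
  return out;
}
```

For example, `nudgeAdjacent(faders, 100, 4, 0.25)` raises faders 100 through 103 by a quarter of their travel, while a single-fader change is just `nudgeAdjacent(faders, i, 1, delta)`.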

Another thing you can perceive is the limits of machine learning, in the form of visual artifacts. The model does not really understand what a human face is (or whatever else you trained it on); it simply holds statistical values about how all the images in the training data are related. As a result, you can see strange things happening, such as earrings or eyeglasses that only half appear. The model does not know what an earring or a pair of eyeglasses is, nor that these things need to be able to exist in real space. Instead, it can only infer what an image should look like given the training data and the parameters used to produce it, which means that the laws of real-world space don't need to apply.

JavaScript proved to be a really nice choice for experiments like this one because the code is very short: all the requests to Runway are made over HTTP, which is native to JavaScript, and so is the response format, JSON, so there was no need for special parsing code or libraries. You can inspect the JavaScript code that drives this experiment here.
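A minimal sketch of such a request using the standard `fetch` API (available in browsers and in Node.js 18+). The model URL, the optional bearer token, the input field names `z` and `truncation`, and the assumption that the model answers with an `image` field holding a data URL are all hypothetical placeholders. Check the input and output specification of the actual hosted model before relying on them.

```javascript
// Build the POST request. The URL, token, and field names are hypothetical.
function buildQueryRequest(modelUrl, token, z, truncation = 0.8) {
  const headers = {
    "Content-Type": "application/json",
    Accept: "application/json",
  };
  if (token) headers.Authorization = `Bearer ${token}`; // assumed auth scheme
  return {
    url: modelUrl,
    options: {
      method: "POST",
      headers,
      body: JSON.stringify({ z, truncation }), // the latent vector travels as plain JSON
    },
  };
}

// Send the latent vector and return the generated image.
async function queryModel(modelUrl, token, z, truncation) {
  const { url, options } = buildQueryRequest(modelUrl, token, z, truncation);
  const response = await fetch(url, options);
  if (!response.ok) throw new Error(`HTTP ${response.status}`);
  const result = await response.json(); // JSON is parsed natively, no extra library
  return result.image; // assumed: a data URL such as "data:image/jpeg;base64,..."
}
```

In a page, the returned string could be assigned directly to an `<img>` element's `src` each time a fader moves.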

I hope that this small tool allows you to both have fun and get a better understanding of how GANs work.

I also did some other experiments while I was a resident at Runway, which you can read about here. 🙂

Originally published at https://roymacdonald.github.io.