What’s My Line? Next Sentence Prediction in RunwayML with BERT

I spent a few Fridays recently working as an artist-in-residence with RunwayML, a company building software to make heavy-duty machine learning (ML) tech easier to use in creative projects. I’m pretty much their ideal customer: someone with an interest in creative ML but no GPUs, a small computer and a punishing fear of The Cloud.

I’ll focus on language models, which make up a small but growing share of the models available in RunwayML. I’ll describe a situation (next sentence prediction) where I think large ML language models are interesting, then talk through a couple of proof-of-concept experiments in RunwayML.

What are language models good for?

A language model is a set of rules for evaluating the likelihood of sequences of text. Language models have many uses, including generating text by repeatedly answering the question: Given some text, what could come next?
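Those two descriptions, scoring whole sequences and predicting what comes next, are linked by the chain rule of probability. This is the standard textbook formulation, not anything specific to RunwayML. Write a sequence as words $w_1, \ldots, w_n$:

$$
P(w_1, \ldots, w_n) = \prod_{i=1}^{n} P(w_i \mid w_1, \ldots, w_{i-1})
$$

A trigram model, like the counting approach described next, approximates each factor using only the two preceding words (padding the start of the text for the first two), estimated from counts $C(\cdot)$ in the training corpus:

$$
P(w_i \mid w_1, \ldots, w_{i-1}) \approx P(w_i \mid w_{i-2}, w_{i-1}) = \frac{C(w_{i-2}\, w_{i-1}\, w_i)}{C(w_{i-2}\, w_{i-1})}
$$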

If you’re designing a program to suggest the next word in a sentence, you can get pretty far with simple algorithms based on counting n-grams: short sequences of words as they appear on the web, in a book, or in any corpus you choose.

An n-gram strategy to predict the next word in the line “Oops, I did it” might look at its last two words (“did it”) and cross-reference them with counts of all the three-word sequences that start with “did it”. The algorithm’s top suggestions are the third words of the most common of those sequences: words like “again” and “all” and “to” and “for” and “hurt”, depending on the training data.
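Here is a minimal sketch of that trigram strategy in Python. The four-line corpus is invented for illustration, tokenization is naive whitespace splitting, and the function names are hypothetical; a real system would count over far more text.

```python
from collections import Counter, defaultdict


def build_trigram_counts(lines):
    """Map each pair of consecutive words to a Counter of the words that followed it."""
    counts = defaultdict(Counter)
    for line in lines:
        words = line.lower().split()  # naive tokenization: lowercase, split on spaces
        for first, second, third in zip(words, words[1:], words[2:]):
            counts[(first, second)][third] += 1
    return counts


def suggest_next(counts, text, k=3):
    """Suggest up to k next words, looking only at the last two words of `text`."""
    words = text.lower().split()
    if len(words) < 2:
        return []
    key = (words[-2], words[-1])
    # .get avoids inserting an empty entry into the defaultdict for unseen pairs
    return [word for word, _ in counts.get(key, Counter()).most_common(k)]


# Hypothetical toy corpus
corpus = [
    "you did it again",
    "we did it all night",
    "they did it again and again",
    "she did it for love",
]
counts = build_trigram_counts(corpus)
print(suggest_next(counts, "Oops, I did it"))  # ['again', 'all', 'for']
```

Only the last two words matter here, so “Oops, I did it” and “Whatever you did it” get identical suggestions. That is the simplicity (and the limit) of the approach.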

But say you want to use more than the last couple of words. Say you want to suggest lines based on the whole previous line.

Then the counting approach won’t do. Short sequences of words like “did it” are common enough that we can easily find examples of them in the wild, including what came after. But sequences longer than a few words tend to be rare.

If you count only exact occurrences, suggestions for what should follow the line “Oops, I did it again” would be pretty thin. Even if the training data included every song ever written, you’d be limited to lines from Britney Spears’s “Oops!… I Did It Again” plus the later songs that quote it.
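To make that sparsity concrete, here is a minimal sketch of the exact-match approach keyed on the whole previous line. The two “songs” are invented lines (not real lyrics), and the helper names are hypothetical.

```python
from collections import Counter, defaultdict


def build_next_line_counts(songs):
    """Map each exact line (lowercased) to a Counter of the lines that came right after it."""
    followers = defaultdict(Counter)
    for song in songs:
        for line, next_line in zip(song, song[1:]):
            followers[line.lower()][next_line] += 1
    return followers


def suggest_next_line(followers, line):
    """Return every line ever seen after `line`, most frequent first."""
    return [nxt for nxt, _ in followers.get(line.lower(), Counter()).most_common()]


# Hypothetical corpus of two invented songs, one list of lines each
songs = [
    ["I did it again", "left the door wide open", "and the cat got out"],
    ["we did it again", "danced until the lights came on"],
]
followers = build_next_line_counts(songs)
print(suggest_next_line(followers, "I did it again"))    # ['left the door wide open']
print(suggest_next_line(followers, "You did it again"))  # [] (one word off, no match)
```

Change a single word and the lookup finds nothing, even though a trigram model would happily continue “did it again”.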

That’s not so satisfying — more like rote regurgitation than creation. Wouldn’t it be better if you could ask not what sentences best follow this exact sentence, but what sentences best follow this kind of sentence?

BERT For Next Sentence Prediction

BERT is a huge language model that learns by hiding parts of the text it sees and gradually tweaking how it uses the surrounding context to fill in the blanks. In effect, it makes its own flash cards from the world and quizzes itself on them billions of times.
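The flash-card idea can be sketched in a few lines of Python. This is a toy under stated assumptions: whitespace-split words stand in for BERT’s WordPiece subword tokens, the 15% masking rate follows the original BERT setup, and the real recipe’s extra twist (sometimes substituting a random token, or leaving the chosen token unchanged, instead of writing [MASK]) is skipped. `make_flash_card` is a hypothetical helper, not part of any library.

```python
import random

MASK = "[MASK]"


def make_flash_card(tokens, mask_prob=0.15, rng=random):
    """Hide a random subset of tokens and return (quiz, answers).

    Toy version of masked-language-model data: whitespace tokens, and every
    chosen position becomes [MASK] (real BERT sometimes uses a random or
    unchanged token instead). `answers` maps each hidden position to the
    original token, which the model would learn to recover from context.
    """
    n_to_mask = max(1, round(len(tokens) * mask_prob))
    positions = rng.sample(range(len(tokens)), n_to_mask)
    quiz = list(tokens)
    answers = {}
    for pos in positions:
        answers[pos] = quiz[pos]
        quiz[pos] = MASK
    return quiz, answers


rng = random.Random(0)  # seeded so the same card is produced on every run
tokens = "the model fills in the blanks using the words around them".split()
quiz, answers = make_flash_card(tokens, rng=rng)
print(quiz)
print(answers)
```

During training the model sees only `quiz` and is scored on how well it predicts the entries in `answers`; repeating this over a huge corpus is what teaches it to use context.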

I’ve always been intimidated by BERT’s unwieldiness. It needs a lot of memory and far more compute than a lightweight Markov model. But I thought it could open the door to interesting line-by-line songwriting suggestions, so during my residency at RunwayML I ran a couple of experiments to see what kinds of line suggestions I could get from it.

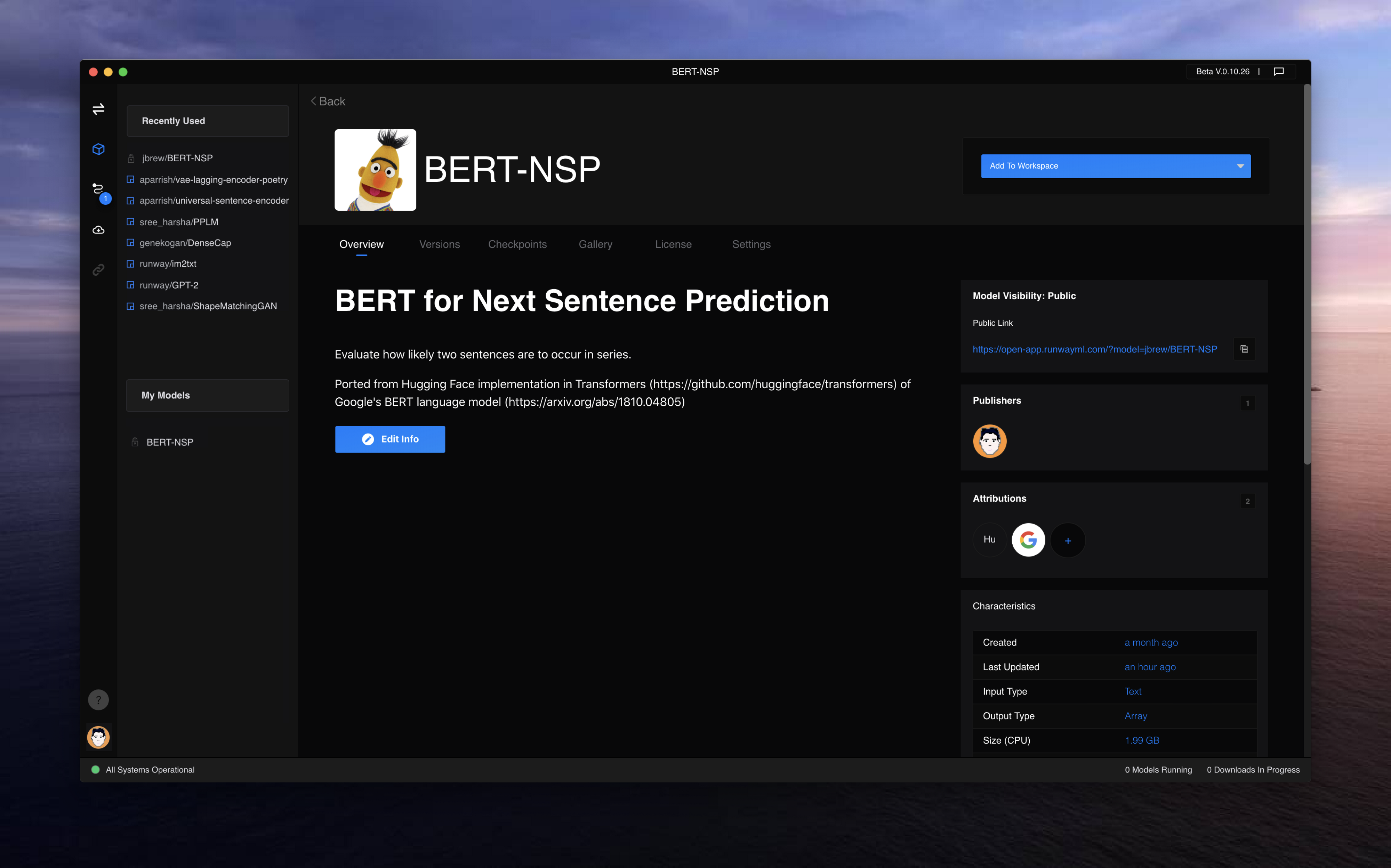

RunwayML takes care of the compute resources for large models, which it hosts remotely. To get BERT running, I first had to “port” a version of the model to RunwayML. This meant creating a GitHub repo with two key files: a list of what the remote machine needs to install to run the model, and a script that runs the model itself. Here’s a tutorial post that explains the porting process.

I chose to port BertForNextSentencePrediction, a version of BERT from the NLP startup Hugging Face that does just what it says on the tin: given two sentences, estimate how likely the second is to follow the first. That’s a task BERT already practiced during its original training, alongside filling in blanks.
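Outside RunwayML, the same model can be queried directly with the transformers library. Below is a minimal sketch, not the residency code. It assumes a recent 4.x release of transformers with PyTorch installed and the `bert-base-uncased` checkpoint (the port may have used a different one). The helper names `load_bert_nsp_scorer` and `rank_next_lines` are hypothetical. Ranking candidate lines by score is one plausible way to turn the model into a line suggester.

```python
def load_bert_nsp_scorer(model_name="bert-base-uncased"):
    """Load BERT with its next-sentence head; return score(first, second) -> probability.

    Assumes transformers 4.x and PyTorch; the checkpoint is downloaded on first use.
    """
    # Imported here so rank_next_lines below can be used without torch installed.
    import torch
    from transformers import BertForNextSentencePrediction, BertTokenizer

    tokenizer = BertTokenizer.from_pretrained(model_name)
    model = BertForNextSentencePrediction.from_pretrained(model_name)
    model.eval()  # turn off dropout so scores are deterministic

    def score(first, second):
        # Encodes the pair as [CLS] first [SEP] second [SEP] with segment ids
        encoding = tokenizer(first, second, return_tensors="pt")
        with torch.no_grad():
            logits = model(**encoding).logits  # shape (1, 2)
        # Label 0 means "second continues first"; label 1 means "second is random".
        return torch.softmax(logits, dim=-1)[0, 0].item()

    return score


def rank_next_lines(first, candidates, score):
    """Sort candidate lines from most to least likely to follow `first`."""
    return sorted(candidates, key=lambda line: score(first, line), reverse=True)
```

Usage would look like `rank_next_lines("Oops, I did it again", candidate_lines, load_bert_nsp_scorer())`, where `candidate_lines` is any list of strings you supply. Keeping the scorer as a plain function makes it easy to swap in a cheaper model while testing the ranking logic.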

Here’s what the repo looks like. And here’s the code for the crucial script, runway_model.py: